Aiming to realize a user-friendly, high-speed AI system

As the world moves toward digital transformation (DX), demand is growing explosively both for analyzing the large amounts of data collected from various systems to find new value and for processing that data at high speed. Looking only at the amount of calculation required for AI systems, it has grown 300,000-fold since 2012, when Deep Learning, represented by AlexNet, appeared.

Meanwhile, the room for improving computing power through semiconductor microfabrication keeps shrinking. To address continuously increasing computing needs, new approaches beyond microfabrication have been invented, such as many-core processors and domain-specific computing. However, reaping their benefits also requires advanced software technologies such as parallel processing and dedicated languages, and these can be handled only by a limited number of experts.

For example, in April 2019, Fujitsu announced the world’s fastest Deep Learning technology, which shortened the learning time of a deep neural network for image recognition by 30 seconds. This remarkable achievement was made by highly skilled experts after lengthy parameter tuning and repeated trial and error.

Yukito Tsunoda, one of the founding members of this project and a member of the Advanced Computer Systems Project in the ICT Systems Laboratory, says:

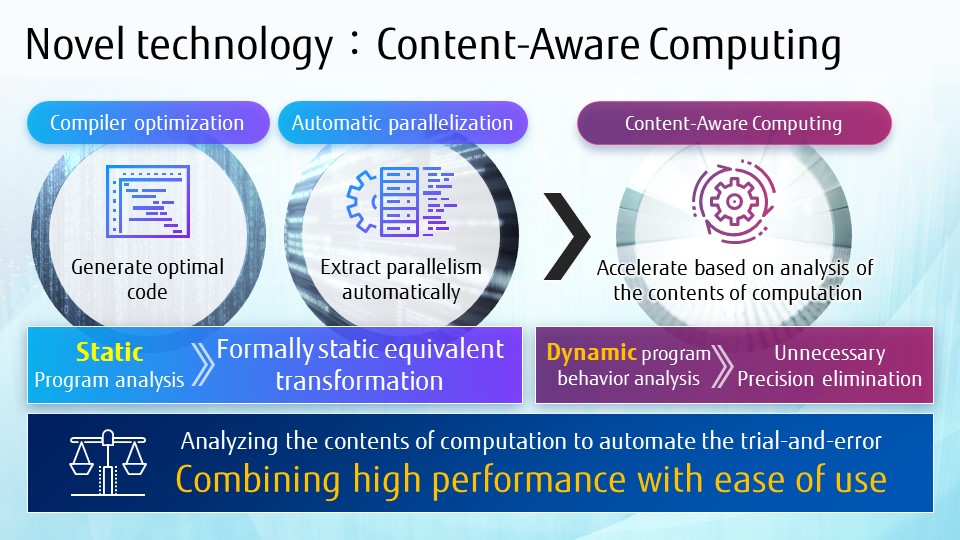

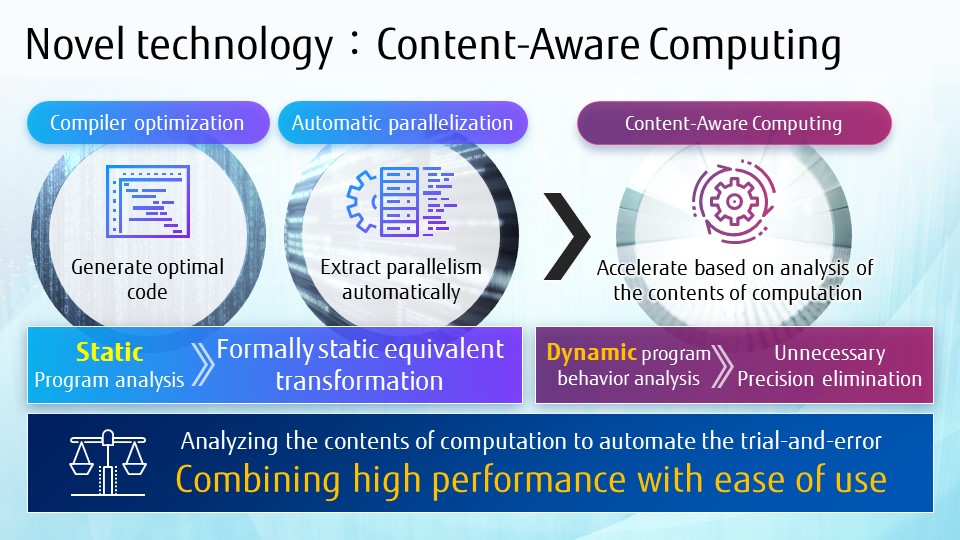

“The ideal computer we are looking for is one that enables everyone to process data at high speed without the help of an expert. This concept was the basis for starting our R&D on ‘Content-Aware Computing,’ a new technology that increases computing speed not only for AI-based systems but for all kinds of computing systems.”

“Complicated and user-unfriendly technology does not satisfy the requirements for high-speed computers. Considering the user needs, we tried to implement a technology that realizes not only high-speed processing but also ease of use.” (Tsunoda)

Two key technologies for increasing computing speed

One conventional way to improve the computing performance of neural networks is to reduce the operational precision from the usual 32 bits to 8 bits, which increases computing speed fourfold through parallel processing. However, if the operational precision is reduced uniformly in all layers of a neural network, calculation accuracy cannot be assured, so an expert had to adjust the operational precision of each layer manually. Our first goal in developing “Content-Aware Computing” is to automate this bit-width adjustment task.

The person in charge of this work is Yasufumi Sakai, a researcher in the Platform Innovation Project. After joining Fujitsu Laboratories, he worked on designing data communication circuits for supercomputers, among other things. He then studied Deep Learning and bit-width reduction technologies in the U.S.

“With current technology for increasing computing speed, the bit width is reduced only for layers determined in advance, and it is difficult to identify the layers where the bit width should actually be reduced. This requires considerable expert work to evaluate various cases by trial and error. To solve this issue, we developed a new ‘Bit-width reduction technology,’ which checks the content of the data being calculated during system operation and automatically controls the bit width, for example using 32 bits for layers where the data is widely distributed and 8 bits for layers where the data distribution is narrower. I came up with this idea through my experience in circuit design, where this is a common feedback control method.” (Sakai)
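The idea of choosing a bit width from the distribution of each layer's data can be illustrated with a minimal Python sketch. It is not Fujitsu's implementation: the spread measure (log2 of the largest magnitude over the median magnitude), the threshold `narrow_threshold_bits`, the function names, and the sample data are all assumptions made for illustration.

```python
import math
import statistics

def spread_in_bits(values):
    """Rough spread of the data: log2(largest magnitude / median magnitude)."""
    magnitudes = [abs(v) for v in values if v != 0.0]
    if not magnitudes:
        return 0.0
    return math.log2(max(magnitudes) / statistics.median(magnitudes))

def choose_bit_width(values, narrow_threshold_bits=6.0):
    """Return 8 for narrowly distributed data and 32 otherwise.

    narrow_threshold_bits is a hypothetical tuning knob, not a value
    taken from the article.
    """
    return 8 if spread_in_bits(values) <= narrow_threshold_bits else 32

def quantize_int8(values):
    """Symmetric linear quantization to integers in [-127, 127] plus a scale."""
    max_magnitude = max(abs(v) for v in values)
    scale = max_magnitude / 127 if max_magnitude > 0 else 1.0
    return [round(v / scale) for v in values], scale

# Illustrative per-layer data (made up for this sketch).
layers = {
    "narrow_layer": [i / 100 for i in range(-100, 101)],
    "wide_layer": [2.0 ** k for k in range(-12, 13)],
}

# Re-evaluate the bit width from the data seen at run time, layer by layer.
for name, values in layers.items():
    print(name, "->", choose_bit_width(values), "bits")
```

In this sketch the decision is recomputed from the data itself each time it is called, which loosely mirrors the feedback-control idea described above. A real system would presumably use a more careful statistic than a single threshold.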

The other is the “Synchronous mitigation” technology. In parallel deep learning, once the processes on all nodes have finished, the learning results are aggregated and the learning information is updated. Learning and result aggregation are repeated until the system reaches the required learning accuracy. However, in a cloud or parallel environment, responses from some nodes are temporarily delayed, for example because of network contention. If the system waits for these nodes, total system performance deteriorates significantly. If the system instead aborts processing on the nodes with delayed responses, total processing becomes faster, but the learning effect per learning cycle decreases, so more learning cycles are needed to reach the necessary learning accuracy. To address this issue, Masahiro Miwa of the Platform Innovation Project, who has been engaged in supercomputer development, proposed an impressive method.

“By dynamically predicting how terminating processing partway through would affect both the reduction in processing time and the calculation results, we developed a ‘synchronous mitigation technology’ that automatically adjusts and controls when operations are terminated, within a range that does not worsen the calculation results.” (Miwa)
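The trade-off between waiting for slow nodes and cutting them off can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the article describes a dynamic prediction of the effect on results, whereas this sketch substitutes a fixed rule (keep at least `min_fraction` of the nodes) as a stand-in. The function names, the parameter, and the timing data are hypothetical.

```python
import math

def choose_cutoff(finish_times, min_fraction=0.875):
    """Earliest time by which at least min_fraction of the nodes have finished.

    min_fraction is a hypothetical stand-in for the article's prediction of
    how much cutting off nodes would affect the calculation results.
    """
    ordered = sorted(finish_times)
    needed = max(1, math.ceil(min_fraction * len(ordered)))
    return ordered[needed - 1]

def aggregate(gradients, finish_times, cutoff):
    """Average the gradients of nodes that finished by the cutoff; skip the rest."""
    kept = [g for g, t in zip(gradients, finish_times) if t <= cutoff]
    dim = len(kept[0])
    averaged = [sum(g[i] for g in kept) / len(kept) for i in range(dim)]
    return averaged, len(kept)

# Made-up timings in seconds for eight nodes; one node is badly delayed.
finish_times = [1.0, 1.1, 0.9, 1.2, 1.0, 1.1, 5.0, 1.05]
gradients = [[1.0, 2.0] for _ in finish_times]

cutoff = choose_cutoff(finish_times)
averaged, used = aggregate(gradients, finish_times, cutoff)
print(f"cutoff={cutoff}s, used {used} of {len(finish_times)} nodes, update={averaged}")
```

With these sample timings the cycle ends once seven of the eight nodes have reported, rather than waiting for the delayed node. Lowering `min_fraction` shortens each cycle further but uses less data per update, which is the cost the article says must be balanced against the number of learning cycles.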

“Content-Aware Computing” increases AI processing speed by fusing the two technologies, “Bit-width reduction” and “Synchronous mitigation.” Our verification results using a supercomputer show that the newly developed bit-width reduction technology increased processing speed by about 3 times and the synchronous mitigation technology by about 3.7 times, making overall AI processing up to 10 times faster. In addition, because the calculation accuracy can be adjusted automatically as needed by checking details of the data being processed, such as its distribution and the required processing time, even non-experts can now improve computing performance, which previously depended on experts.

“Content-Aware Computing” for improving both performance and usability

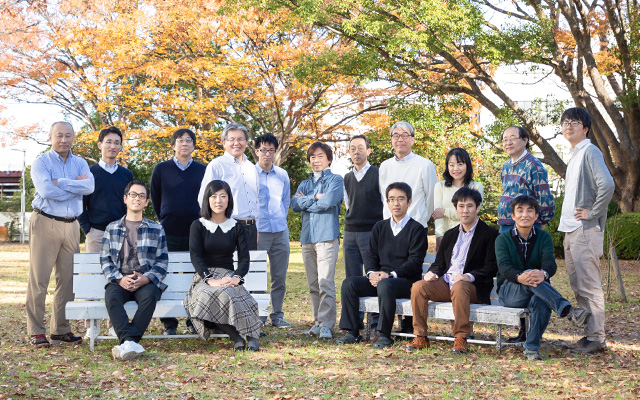

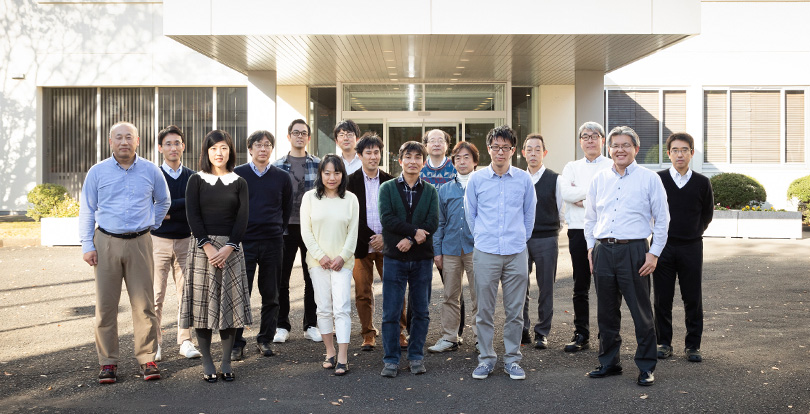

Gathering multi-skilled specialists for this project

Through these steps, Content-Aware Computing was announced as a novel technology in October 2019. At Fujitsu Laboratories, we are now exploring fields where this technology can be applied while repeating functional tests and improvements. Hong Gao of the Advanced Computer Systems Project, who previously worked on circuit design and related tasks, is taking on this role.

“To solve social issues such as a low birth rate and an aging population, the need for technologies that speed up the AI processing used in nursing-care robots and self-driving vehicles will increase. We think that ‘Content-Aware Computing’ can be applied to these new services and DX applications and can contribute to society. However, to use it in actual business, we have to clear further hurdles, such as making it more user-friendly. We will address these problems one by one from now on.” (Gao)

At present, about 10 members are working on this project toward practical use of the technology. The project is being advanced by Sakai, who has extensive knowledge of both circuit design and deep learning; Miwa, who is a specialist in supercomputer system communications; and others who are experts in GPUs, compilers, deep learning frameworks, and other relevant fields. Tsunoda says, “All of these specialists are exchanging their ideas and advancing project activities.”

As an aside, both Tsunoda and Gao were researchers who primarily specialized in hardware design (such as semiconductor devices). However, they say that they can make use of their former experience and knowledge in this project. Tsunoda continues, “Speaking from my experience in device design and simulation, I think we will be able to use AI for these purposes, and I’m looking forward to pursuing these methods.” Gao adds, “I joined this project last April and started to learn Deep Learning, so I’m still a beginner. However, I think I can take an approach based on my experience in circuit design.”

Deployment opportunities on various platforms, by improving usability

Our final goal in developing “Content-Aware Computing” is to increase calculation speed while keeping the required accuracy and to make the technology user-friendly. To achieve this goal, we are trying various methods.

“We would like to keep close contact with Fujitsu’s Business Units and external research institutions to continue exploring new methods. Going forward, we would like to use this Content-Aware Computing technology for various cloud services and diverse platforms such as Fujitsu’s platform products.” (Tsunoda)

Sakai also comments: “Although the R&D on AI itself is actively being promoted by Fujitsu Labs’ Artificial Intelligence Laboratory, we believe that our role is to support its evolution by speeding up computer processing. We will endeavor to overcome the limitations of computing technology and continue the R&D on Content-Aware Computing that will support technological development in the AI era.”