Researcher Interviews

Confronting GPU shortages head-on: The dynamics behind the development of the AI computing broker

There has been a surge in demand for GPUs, the processors best suited to AI workloads, as a direct result of the intensifying competition in AI development, particularly with generative AI. To address the global GPU shortage, Fujitsu launched the "AI computing broker" technology in October 2024, as our direct answer to maximizing GPU utilization in AI processing. The AI computing broker dynamically allocates GPUs to AI applications in real-time, managing assignments at a granularity finer than entire application executions. This significantly reduces the number of GPUs required for AI processing, contributing to lower power consumption, as well as reduced AI development and operational costs. In this article, we interview four members of the AI computing broker research team, delving into their ongoing trial experiments and the challenges they faced in developing this technology.

Published on April 10, 2025

RESEARCHERS

Yoshifumi Ujibashi

Principal Researcher

Computing Workload Broker Core Project

Computing Laboratory

Fujitsu Research

Fujitsu Limited

Akihiro Tabuchi

Principal Researcher

Computing Workload Broker Core Project

Computing Laboratory

Fujitsu Research

Fujitsu Limited

Kouji Kurihara

Researcher

Computing Workload Broker Core Project

Computing Laboratory

Fujitsu Research

Fujitsu Limited

Godai Takashina

Researcher

Computing Workload Broker Core Project

Computing Laboratory

Fujitsu Research

Fujitsu Limited

AI computing broker: An innovative approach to addressing GPU shortages and power consumption issues

What is the purpose behind the development of the AI computing broker?

Yoshifumi: The world is currently experiencing intensified competition in AI development. The demand for GPUs, essential for AI processing, has surged, leading to a severe GPU shortage. Prices have skyrocketed, and procurement takes considerable time. Even with cutting-edge technology, GPU shortages can delay development or even prevent the establishment of necessary AI processing environments. In addition, AI’s power consumption is projected to reach 10% of global electricity demand by 2030, raising serious environmental concerns. The power consumption issue is so critical that some companies are even considering adopting nuclear power. Our AI computing broker addresses both of these major challenges: GPU shortages and power consumption issues. This technology significantly reduces the number of GPUs required for AI processing, while also substantially lowering costs and power usage, contributing to the realization of sustainable AI.

Can you give us some examples of its applications and use cases?

Yoshifumi: The target users of the AI computing broker include AI service providers, data centers, and cloud operators. In environments where multiple AI processes are executed on GPU-based computing infrastructure, it supports the efficient operation of computing resources and reduces the number of GPUs needed for AI processing. In a trial experiment with TRADOM Inc., a provider of AI-powered currency forecasting services, applying this technology to the model development process resulted in a roughly twofold improvement in processing efficiency. Additionally, when applied to AlphaFold2, an AI that predicts the 3D structure of proteins, we achieved the same computational efficiency with half the number of GPUs.

Yoshifumi leads the overall design and development direction of the AI computing broker

We've heard that the trial experiment has validated the value of this technology.

Yoshifumi: We confirmed the technology's value in different scenarios. AI processing involves two types of operations: training, which is time-consuming, and inference (prediction or judgment based on learned information), which requires rapid responses. TRADOM Inc. utilizes both types of AI processing. In the case of training, by using the AI computing broker, we confirmed the potential for optimizing the use of GPU computing resources needed for AI model generation, enabling the development of highly accurate models in a shorter timeframe. For inference, we confirmed that the technology can contribute to faster responses for AI services available on websites. When user access is concentrated, the AI computing broker maintains service responsiveness by dynamically shifting computational resources from training processes to inference processes. This allows inference operations to run smoothly even while training processes are ongoing.
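The shift from training to inference under load can be pictured as a priority rule on requests for the GPU. The following is a minimal sketch, assuming a simple two-level priority; the class name `GpuRequestQueue`, its methods, and the priority values are hypothetical and are not taken from the AI computing broker itself.

```python
import heapq
import itertools
from typing import List, Tuple


class GpuRequestQueue:
    """Toy queue of GPU requests in which inference outranks training (assumed policy)."""

    PRIORITY = {"inference": 0, "training": 1}  # lower value is served first

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._order = itertools.count()  # keeps arrival order within one priority level

    def submit(self, kind: str, name: str) -> None:
        """Register a request of the given kind ("inference" or "training")."""
        heapq.heappush(self._heap, (self.PRIORITY[kind], next(self._order), name))

    def next_to_run(self) -> str:
        """Return the name of the request that should get the GPU next."""
        return heapq.heappop(self._heap)[2]
```

With this rule, an inference request that arrives while training steps are waiting is granted the GPU first, and the training steps resume in their original order afterwards.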

Enhancing GPU efficiency through switching based on program processing

Could you explain the strengths and characteristics of the AI computing broker?

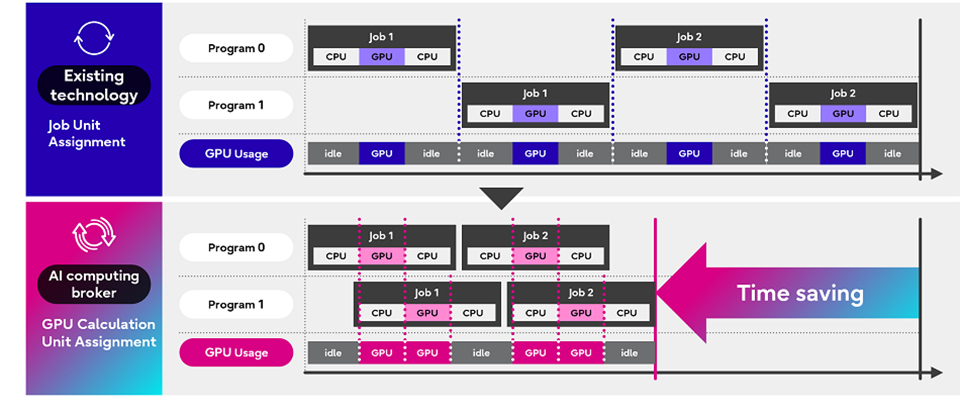

Godai: Existing technologies allocate GPUs on a per-job basis, where a job is a unit of work executed by a program. For example, Job 1 and Job 2 of Program 0, and Job 1 and Job 2 of Program 1 each use the GPU sequentially. This creates idle time for the GPU (e.g., during pre- and post-processing that don't utilize the GPU, or while waiting for input from other systems or users), resulting in low GPU utilization. In contrast, the AI computing broker allocates the resources at the GPU computation level. By assigning GPUs to Program 0 and Program 1 based on GPU availability, it improves GPU utilization and reduces overall processing time. This technology delves into the program's processing details and allocates GPUs only to the processes that actually require them, enabling significantly more efficient GPU resource allocation and maximizing GPU usage.
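The difference between the two allocation styles can be illustrated with a small simulation. This is a sketch under simplifying assumptions, not the broker's actual scheduler: there is a single GPU, switching costs nothing, each program alternates between CPU-only and GPU phases of known length, and the function names and phase durations are invented for illustration.

```python
from typing import List, Tuple

Phase = Tuple[str, int]  # ("cpu" or "gpu", duration in arbitrary time units)


def per_job_allocation(programs: List[List[Phase]]) -> Tuple[int, float]:
    """The GPU is held for a whole job, so jobs run strictly one after another."""
    makespan = sum(d for prog in programs for _, d in prog)
    gpu_busy = sum(d for prog in programs for kind, d in prog if kind == "gpu")
    return makespan, gpu_busy / makespan


def fine_grained_allocation(programs: List[List[Phase]]) -> Tuple[int, float]:
    """The GPU is held only during "gpu" phases; CPU phases of different programs overlap."""
    ready = [0] * len(programs)       # time at which each program can start its next phase
    next_phase = [0] * len(programs)  # index of each program's next phase
    gpu_free_at = 0
    gpu_busy = 0
    while True:
        pending = [i for i, prog in enumerate(programs) if next_phase[i] < len(prog)]
        if not pending:
            break
        # Serve the program whose next phase can start earliest (ties go to the lower index).
        i = min(pending, key=lambda p: (ready[p], p))
        kind, duration = programs[i][next_phase[i]]
        if kind == "gpu":
            start = max(ready[i], gpu_free_at)  # wait while another program holds the GPU
            gpu_free_at = start + duration
            gpu_busy += duration
            ready[i] = gpu_free_at
        else:
            ready[i] += duration
        next_phase[i] += 1
    makespan = max(ready)
    return makespan, gpu_busy / makespan
```

For two identical programs with the phases `[("cpu", 2), ("gpu", 2), ("cpu", 2), ("gpu", 2)]`, the per-job model takes 16 time units at 50% GPU utilization, while the fine-grained model takes 10 time units at 80% utilization, because one program's CPU phases overlap with the other's GPU phases.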

Strengths and characteristics of the AI computing broker

In addition, this newly developed technology is designed to minimize dependencies on specific hardware or software environments. Specifically, it allows users to leverage commonly used AI frameworks like PyTorch without modification. While it's technically possible to customize an AI framework to achieve similar functionality, this approach requires users to adopt the custom version, potentially preventing them from benefiting from the latest features and updates continuously being added to the main AI framework. Our technology allows the use of standard AI frameworks as-is, enabling immediate deployment in any environment where those frameworks are already installed. This also provides the advantage of easy updates, allowing users to benefit from the latest hardware support and additional features simply by updating their AI framework.

Easy to use without code customization

What efforts have you made to enhance the usability of the AI computing broker?

Kouji: To make the AI computing broker more user-friendly, we've incorporated customer feedback to drive improvements. Having customers actually use the technology revealed unforeseen challenges and prompted us to re-evaluate our development priorities. For example, we received feedback that requiring a program restart every time the GPU allocation was changed was highly inconvenient. In response, we redesigned the system to allow GPU allocation changes without stopping the program. We also improved the interface for more intuitive operation. In addition, it became clear that using the technology initially required customers to customize their program code, which proved difficult even with the manual. To address this, we developed an auto-apply feature that allows customers to use the AI computing broker without modifying their code.

Kouji primarily handles the trial experiments, and also contributes to feature development

What were the challenges in developing the auto-apply feature for the AI computing broker?

Akihiro: The auto-apply feature allows users to utilize the AI computing broker without modifying their program code. To achieve this, we had to overcome three main challenges. First, in order to allocate GPUs only when a program actually uses them, we needed to detect precisely when a program starts and stops using the GPU. Second, programs are typically written assuming full access to GPU memory. Therefore, when switching between programs running on the GPU, we needed to offload data from GPU memory to the CPU to free up GPU resources. Finally, in systems with multiple GPUs, the availability of GPUs changes dynamically. We had to ensure that programs function correctly regardless of which GPU is assigned.

How did you address these challenges?

Akihiro: Understanding and leveraging the workings of AI frameworks was crucial to solving these challenges. An AI framework is a software foundation that supports the development and execution of AI models. First, we monitor the AI framework's processes to detect when a program is using the GPU. Next, we manage the GPU memory used by the AI framework, offloading data from the GPU to the CPU when switching between programs and restoring it to the GPU at the appropriate time. Finally, to ensure that programs operate correctly regardless of the assigned GPU, we replace the GPU used by the AI framework with the actually allocated GPU. This effectively makes it appear to the program as if it's always using the same GPU.
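The offload, restore, and device replacement steps described above can be modeled in a few lines. This is a toy sketch, assuming buffers are plain byte strings held in dictionaries; the class `BrokeredProgram` and its methods are hypothetical and do not reflect how the AI computing broker hooks into a real AI framework.

```python
from typing import Dict, Optional


class BrokeredProgram:
    """Toy model of one program whose GPU state is managed by a broker."""

    def __init__(self, name: str, logical_gpu: int = 0) -> None:
        self.name = name
        self.logical_gpu = logical_gpu           # the device the program believes it uses
        self.physical_gpu: Optional[int] = None  # the device actually assigned, if any
        self.on_gpu: Dict[str, bytes] = {}       # buffers currently resident on the GPU
        self.on_host: Dict[str, bytes] = {}      # buffers offloaded to CPU memory

    def resume_on(self, physical_gpu: int) -> None:
        """Assign a physical GPU and restore any offloaded buffers onto it."""
        self.physical_gpu = physical_gpu
        self.on_gpu.update(self.on_host)
        self.on_host.clear()

    def suspend(self) -> None:
        """Offload all buffers to the host and give the GPU back."""
        self.on_host.update(self.on_gpu)
        self.on_gpu.clear()
        self.physical_gpu = None

    def resolve_device(self) -> int:
        """Translate the program's fixed logical device into the assigned physical one."""
        if self.physical_gpu is None:
            raise RuntimeError("no GPU is currently assigned")
        return self.physical_gpu
```

In this model, a program can be suspended on one GPU and resumed on another: its `logical_gpu` never changes, so from the program's point of view it is always using the same device, while `resolve_device()` returns whichever GPU is assigned at that moment.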

Akihiro is in charge of the auto-apply feature and demo development

We heard that you also uncovered challenges while demonstrating the technology at exhibitions.

Akihiro: To demonstrate the advantages of the AI computing broker, we created a demo that ran multiple large language models (LLMs) on the same GPU. Typically, running multiple LLMs on the same GPU is not feasible when there is insufficient GPU memory. This demo showcased the AI computing broker's ability to run multiple LLMs on the same GPU, even with limited GPU memory, by switching between AI models on the GPU.

Through this demo, we discovered a challenge: when using large AI models like LLMs, the time needed to transfer the models becomes significant, leading to a large switching overhead (the extra time spent on the switch itself rather than on useful computation). Even with a superior technology, lengthy transfer times not only detract from the demo's impact but also make the technology less user-friendly for customers. We revisited the transfer methods and eliminated unnecessary transfers. Specifically, we rewrote the data transfer between the GPU and CPU to utilize hardware-accelerated methods for faster transfer speeds. In addition, since the AI model doesn't change on the GPU during LLM inference, transferring the model from the GPU back to the CPU is unnecessary. Therefore, we now keep the inference AI model permanently on the CPU, transferring it to the GPU only when needed for inference processing, and omitting the transfer back from the GPU to the CPU. This approach reduced switching time by approximately a factor of ten, significantly improving the usability of the AI computing broker.
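The effect of the two optimizations can be seen in a back-of-the-envelope cost model. This is a sketch under stated assumptions: both models are the same size, transfer time is simply size divided by bandwidth, and the function name and every number used with it are illustrative rather than measurements from the AI computing broker.

```python
def switch_time_seconds(model_gb: float, bandwidth_gb_per_s: float, copy_back: bool) -> float:
    """Estimated time to swap one model off the GPU and another of equal size onto it.

    copy_back=True  : outgoing model is copied GPU -> CPU, then incoming model CPU -> GPU.
    copy_back=False : outgoing model is simply dropped (the CPU copy stays authoritative),
                      so only the incoming CPU -> GPU transfer is paid.
    """
    transfers = 2 if copy_back else 1
    return transfers * model_gb / bandwidth_gb_per_s
```

Under this model, skipping the copy back to the CPU halves the switching time at a given bandwidth, for example from `switch_time_seconds(10.0, 5.0, True)` to `switch_time_seconds(10.0, 5.0, False)`. Any further reduction has to come from a higher effective transfer bandwidth, which is where faster transfer methods enter.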

Rapid R&D through development tools and teamwork

Could you tell us about the research process itself?

Godai: Our team primarily works remotely, so we have limited face-to-face interaction in the office. However, we employ various strategies to ensure rapid development. Specifically, we maintain close communication and collaboration through daily morning meetings where we share and discuss challenges, and we track outstanding tasks on GitHub, a software development platform for managing projects and source code. We also utilize development tools such as Continuous Integration (CI), which automates code checks to ensure quality while accelerating development. By using CI to automatically detect bugs and other issues that can be identified mechanically, we've reduced the review burden while improving code quality and reliability.

Godai is in charge of feature development and also handles academic conferences and exhibitions

We heard there was an interesting story about the team's R&D work.

Yoshifumi: This happened when Akihiro was in the US on a business trip for the SC24 exhibition (the world's largest international conference for supercomputing). We received an urgent request to verify the applicability of the AI computing broker to a specific inference microservice, requiring a quick turnaround. Godai and Akihiro, who was in the US at the time, were the primary people in charge. We decided to leverage the time difference. Akihiro worked on it during breaks at the exhibition in the US and shared his progress on GitHub. While it was nighttime in the US, Godai worked on it in Japan, and then Akihiro picked it up again when his day began. By diligently sharing information and utilizing the time difference, we completed the verification within a tight one-week deadline. It was an unusual situation, but thanks to their strong technical skills, teamwork, and seamless information sharing, they were able to deliver it successfully.

The development goal: Toward a generalized and easily accessible technology

What are your future plans for the AI computing broker?

Kouji: To broaden the reach of the AI computing broker, we need to expand support for more hardware. We are also exploring a no-code version compatible with various AI development frameworks, aiming to lower the barrier to entry and increase accessibility for a wider audience.

Akihiro: I plan to expand the scope of the auto-apply feature I developed and further reduce its overhead. My goal is to make the AI computing broker a more general-purpose technology that's easy for anyone to use.

Godai: Currently, I'm working on expanding and stabilizing the functionality of the AI computing broker to operate across multiple servers. This involves coordinating multiple physical servers, which presents numerous technical challenges, such as increased communication complexity. It's a very demanding task, but tackling these difficult challenges contributes to my personal growth and provides a strong sense of accomplishment.

Yoshifumi: Our aim is to make the technology more versatile. Specifically, we want to expand beyond the current support for PyTorch and TensorFlow to encompass a wider range of AI frameworks. We are also considering further enhancements to accelerate and optimize AI processing as a whole. Going forward, we want to offer this technology to more GPU resource providers, such as cloud service providers, and contribute to enhancing our customers' business productivity through AI.

How can I find out more about the AI computing broker?

Kouji: To learn more about the AI computing broker, please watch our demo video. If you're interested in participating in a trial experiment, please don't hesitate to contact us. For those who would like to explore other advanced technologies from Fujitsu, please visit the Fujitsu Research Portal.

Fujitsu's Commitment to the Sustainable Development Goals (SDGs)

The Sustainable Development Goals (SDGs) adopted by the United Nations in 2015 represent a set of common goals to be achieved worldwide by 2030. Fujitsu's purpose, “to make the world more sustainable by building trust in society through innovation,” is a promise to contribute to the vision of a better future empowered by the SDGs.