A passion for knowledge, building a future based on computational innovation

Anh Nguyen, Computing Laboratory

A desire for exploring the unknown

As a child, I was endlessly curious and constantly asked questions (too many, according to my parents), such as "Why is the sky blue?" and "Why do giraffes have long necks?" I loved science, especially physics, because it explained the natural phenomena around us and taught me to think logically. That love of asking "why" continued into university, where I began to realize how much remained unanswered and just how many mysteries were still waiting to be solved.

At university, my department offered many interesting courses on computer architecture and communication systems, but the digital design course truly shaped my direction. I discovered a passion for research during a term-long project focused on computer vision for traffic cameras, during which I surveyed papers, experimented with different algorithms, and evaluated their performance. We got a conference paper out of it, which was a great learning experience. I loved the daily challenge of learning something new or solving a problem independently. That's when I knew I wanted to pursue further study and a career in research.

Meeting the demand: creating high-performance AI accelerators

Fujitsu and RIKEN are well known for their supercomputers, including the K computer and its successor, Fugaku, which was ranked the world's fastest supercomputer. Because I wanted to conduct research on computer architecture, especially on designing domain-specific accelerators for computer systems, joining Fujitsu Research and its work on high-performance computing was my ideal career path.

Since joining the company, I've taken on the challenge of developing AI accelerators that break the mold in performance. With the steady growth of AI and HPC applications, the demand for efficient computing platforms to run them has grown as well. The computing demand of recent AI models is reported to grow 5-10 times each year, whereas the computing capability of general-purpose processors barely doubles per year. Because this gap compounds year after year, general-purpose processors alone cannot keep up with the pace of AI. Specialized, domain-specific accelerators, such as those designed for AI, are therefore needed. These accelerators achieve high performance by focusing on target domains such as machine learning, robotics, and image recognition.
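To make the compounding concrete, the short calculation below uses only the growth rates quoted above and assumes, purely for illustration, that demand and general-purpose capability start at parity and grow at constant yearly rates. Under those assumptions the gap after n years is (demand growth / processor growth)^n, so after three years it is roughly 16x at 5x yearly demand growth and 125x at 10x yearly demand growth. The function name `demand_gap` is hypothetical.

```python
def demand_gap(demand_growth: float, cpu_growth: float, years: int) -> float:
    """Ratio of compute demand to general-purpose compute capability after `years`,
    assuming both start equal and grow at constant yearly rates (illustrative only)."""
    return (demand_growth / cpu_growth) ** years


# Growth rates taken from the text: AI demand 5-10x per year, processors ~2x per year.
for demand in (5.0, 10.0):
    for years in (1, 3, 5):
        gap = demand_gap(demand, 2.0, years)
        print(f"demand x{demand:g}/yr, after {years} yr: gap = {gap:,.1f}x")
```

The point of the exercise is the exponent: even the lower estimate leaves general-purpose processors nearly two orders of magnitude behind within five years.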

Focus on developing reconfigurable hardware accelerators for AI applications

My project focuses on developing a dataflow reconfigurable hardware accelerator for AI applications to address this growing need. The accelerator tailors its hardware architecture to specific tasks, resulting in higher processing speeds. One of my foremost tasks has been exploring the architecture of this accelerator. This has involved studying which types of AI processing are most suitable for acceleration with a dataflow architecture and validating the architecture's effectiveness for those workloads. Next, starting from a baseline architecture, we explored how to execute multiple processing tasks efficiently on the same hardware.
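For readers new to dataflow execution, the toy simulation below illustrates the general firing rule that dataflow architectures are built around: an operation executes as soon as all of its operands are available, so independent operations can run in the same step. It is a generic textbook-style sketch, not a model of the accelerator described here; the graph format and the function `run_dataflow` are hypothetical.

```python
import operator
from typing import Callable, Dict, List, Tuple

# Hypothetical toy dataflow graph: node name -> (operation, names of its operands).
# Input names (a, b, ...) are not graph nodes; their values come from `inputs`.
Graph = Dict[str, Tuple[Callable[..., float], List[str]]]


def run_dataflow(graph: Graph, inputs: Dict[str, float]) -> Tuple[Dict[str, float], List[List[str]]]:
    """Evaluate `graph` with a simple dataflow firing rule: in each step, every node
    whose operands are all available fires. Returns all computed values and the
    list of nodes that fired in each step."""
    values = dict(inputs)
    steps: List[List[str]] = []
    pending = [n for n in graph if n not in values]
    while pending:
        ready = [n for n in pending if all(src in values for src in graph[n][1])]
        if not ready:
            raise ValueError("graph has a cycle or a missing input")
        for n in ready:  # all ready nodes fire in the same step
            op, srcs = graph[n]
            values[n] = op(*(values[s] for s in srcs))
        steps.append(ready)
        pending = [n for n in pending if n not in values]
    return values, steps


# y = a*b + c*d: the two multiplications are independent, so they fire together.
graph: Graph = {
    "m1": (operator.mul, ["a", "b"]),
    "m2": (operator.mul, ["c", "d"]),
    "y": (operator.add, ["m1", "m2"]),
}
values, steps = run_dataflow(graph, {"a": 2.0, "b": 3.0, "c": 4.0, "d": 5.0})
print(values["y"], steps)
```

Here the two multiplications fire together in the first step and the addition fires in the second, which is the kind of parallelism a dataflow accelerator exposes in hardware.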

This has led me to delve deeper into mapping techniques, that is, assigning the operations of an application to specific hardware components of the computing system with the goal of optimizing performance, resource utilization, and power consumption. Specifically, I study how to map applications, represented as dataflow graphs, onto the hardware architecture, represented as a device model graph. This is a challenging yet fascinating task, as mapping is a notoriously complex problem in computer-aided design (CAD).
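The sketch below shows the basic shape of the mapping problem: operations of a dataflow graph are placed onto processing elements (PEs) of a device model graph, here assumed to be a small 2D mesh in which each PE supports only certain opcodes, and every dataflow edge must connect neighbouring PEs. It is a deliberately simplified placement-only model solved with plain backtracking, chosen for brevity; real CGRA mappers also handle multi-hop routing and scheduling over time, and this is not the framework described in the text. All names (`make_mesh`, `map_dfg`, the opcode strings) are hypothetical.

```python
from itertools import product
from typing import Dict, List, Optional, Set, Tuple

PE = Tuple[int, int]  # (row, col) of a processing element in a hypothetical grid


def make_mesh(rows: int, cols: int) -> Dict[PE, Set[PE]]:
    """Device model graph: each PE links to its north/south/east/west neighbours."""
    adj: Dict[PE, Set[PE]] = {}
    for r, c in product(range(rows), range(cols)):
        adj[(r, c)] = {(r + dr, c + dc) for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
                       if 0 <= r + dr < rows and 0 <= c + dc < cols}
    return adj


def map_dfg(ops: Dict[str, str], edges: List[Tuple[str, str]],
            adj: Dict[PE, Set[PE]], supports: Dict[PE, Set[str]]) -> Optional[Dict[str, PE]]:
    """Place each DFG operation on a distinct PE that supports its opcode so that every
    DFG edge joins neighbouring PEs. Plain backtracking; returns None if impossible."""
    order = list(ops)
    placement: Dict[str, PE] = {}

    def legal(node: str, pe: PE) -> bool:
        if pe in placement.values() or ops[node] not in supports[pe]:
            return False
        for src, dst in edges:  # check edges whose other endpoint is already placed
            other = dst if src == node else src if dst == node else None
            if other in placement and placement[other] not in adj[pe]:
                return False
        return True

    def place(i: int) -> bool:
        if i == len(order):
            return True
        node = order[i]
        for pe in adj:
            if legal(node, pe):
                placement[node] = pe
                if place(i + 1):
                    return True
                del placement[node]  # undo and try the next PE
        return False

    return dict(placement) if place(0) else None


# Map y = m1 + m2 (m1, m2 are multiplications) onto a 2x2 mesh.
mesh = make_mesh(2, 2)
supports = {(0, 0): {"mul"}, (1, 1): {"mul"}, (0, 1): {"add"}, (1, 0): {"add"}}
ops = {"m1": "mul", "m2": "mul", "y": "add"}
edges = [("m1", "y"), ("m2", "y")]
print(map_dfg(ops, edges, mesh, supports))
```

Even at this toy scale, the search space grows with the number of operations times the number of PEs, which hints at why practical mappers rely on stronger techniques and on problem-specific constraints to prune the search.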

Overcoming mapping challenges for the new architecture

Initially, I found this task quite daunting. Mapping was a new area for me, and the possibility that the architecture would change as target processing requirements evolved added to the complexity. The mapper needed to be flexible enough to handle both evolving architectures and diverse applications. However, by analyzing the target applications and discussing them with team members and collaborators, I identified several invariant conditions. Incorporating these conditions into the mapping framework kept it stable despite potential architectural changes. Later, when our team finalized the architecture, the framework proved robust and adaptable to the updated design.

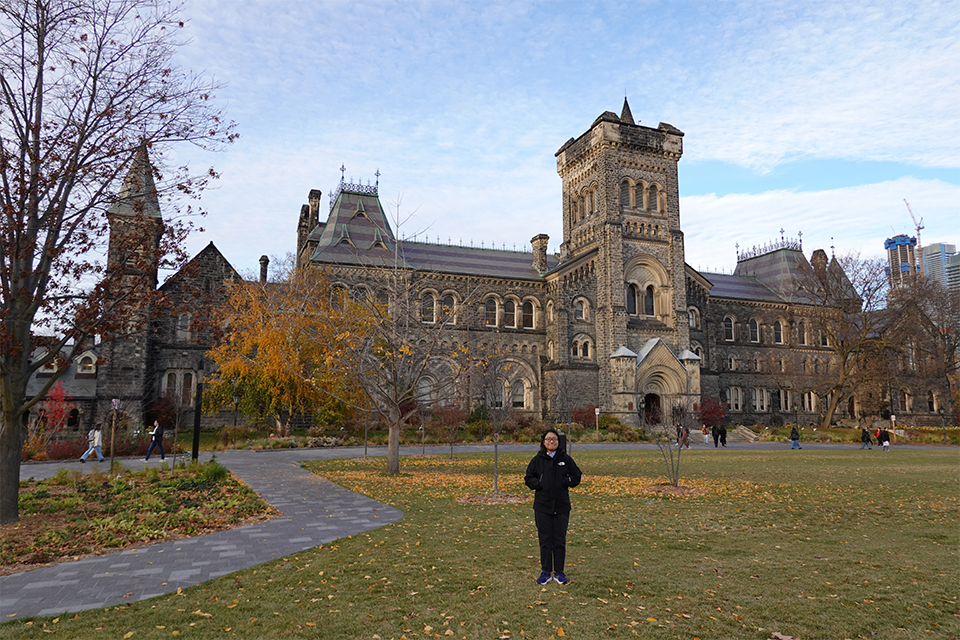

To accomplish this, I've been collaborating with a research group at the University of Toronto, where I am stationed, using their CGRA-ME framework (*1) to model and explore our proposed architecture. I've successfully adapted the framework to address the specific constraints of our architecture and to improve mapping performance. This collaboration, now almost two years long, has been productive and has resulted in several publications at international conferences.

Research life at the University of Toronto

My typical day starts a bit later than most. After brunch, I head to the lab on the University of Toronto campus, where I meet with colleagues and collaborating professors. We often brainstorm and discuss ideas using a whiteboard. Sometimes, I have an evening or night video call with my team in Japan to exchange updates and discuss my research findings.

I enjoy nature and frequently explore local parks or go hiking. Walks, whether through a nearby park or around the beautiful campus, often spark new ideas for my work and help me focus deeply on the challenges at hand. After work, I often unwind by watching movies, especially science fiction.

Accelerating the future of computation

My short-term goal is to develop a highly efficient AI accelerator for our company's next-generation supercomputer, targeting a 10-100x performance gain over conventional processors such as GPUs and CPUs. In the longer term, I aim to become an expert in computer architecture, specializing in reconfigurable dataflow architectures, a compelling alternative to today's power-hungry GPUs. Inspired by the futuristic visions of science fiction, I want to develop architectures that dramatically accelerate computation for critical real-world applications such as autonomous driving and surveillance systems, paving the way for the kind of advanced technology we are used to seeing only on screen.

Messages from colleagues

Anh Nguyen is a dedicated young researcher. She is currently stationed in Toronto, collaborating with the University of Toronto. Her motto, “Enjoy what I do, do what I enjoy,” reflects her overall attitude towards life. She thrives in open discussions with professors, students, and colleagues, and pushes her research forward every day. She isn’t afraid to voice her dislikes, and her unique perspective makes her choices, even of what to order at a restaurant, highly insightful. She balances her professional and personal life with a focus on what truly matters to her. (Atsushi Ike, Fujitsu Consulting (Canada))

-

(*1) CGRA-ME is an open-source tool built at the University of Toronto for the Modelling and Exploration of Coarse-Grained Reconfigurable Architectures.

Titles, numerical values, and proper nouns in this document are as of the time of the interview.