Developed an AI technology that accurately and instantly estimates quality of experience from encrypted packet data for high-quality and efficient distribution of video streaming

October 5, 2023


High-resolution video content, such as full high-definition and 4K, is rapidly gaining in popularity, especially on video streaming services like YouTube. Today, video streaming accounts for 70% of the data transmitted through wireless mobile networks, and that share is expected to keep growing.

Fujitsu has developed an AI technology called “Realtime Quality of Experience Sensing,” which accurately and instantly estimates users’ perception of the quality of experience (QoE) when watching videos. By estimating QoE with this technology and using it as an operational metric for wireless networks, operators can allocate appropriate wireless resources to achieve a level of quality that satisfies users. As a result, large volumes of video streaming can be distributed efficiently and at high quality.

The technology will be showcased at FYUZ, an annual networking technology event in Madrid, Spain, taking place from October 9 to October 11, 2023.


5G era network operation

To distribute large volumes of high-quality video streams over a mobile network, it is essential to allocate radio resources appropriately. Traditionally, mobile network operators have expanded their infrastructure, such as base stations, to meet network quality of service (QoS) requirements, including communication speed. To distribute the ever-growing volume of video streams without delay, operators will need to install even more base stations and operate them efficiently.

On the other hand, QoE, which represents user satisfaction with service usage, is gaining attention as a new metric of network operation. By estimating QoE in real time and using it as a metric to ensure network performance, network quality can be maintained without the need for excessive numbers of base stations.

Challenges in estimating QoE

One method for evaluating media quality, such as video, is the Mean Opinion Score (MOS). This is a subjective assessment of QoE. The relationship between this value and the user satisfaction with service quality has been defined experimentally, making it the best metric to determine whether users are satisfied with the service quality or not.

MOS is calculated through user-based subjective quality evaluation testing. More recently, it has become accepted that MOS can be estimated using the international standard ITU-T P.1203, which evaluates video and audio quality. This standard acquires objective media information from video applications, such as the video encoding bit rate, which indicates the amount of data per second. However, this method cannot be applied to applications that lack an interface for obtaining media quality information. Additionally, MOS can only be estimated in units of about 10 seconds, which is the length of a video segment. As a result, ITU-T P.1203 is difficult to apply to fine-grained network operations that need to track quality changes of various video services in a timely manner.

Development of “Realtime Quality of Experience Sensing”

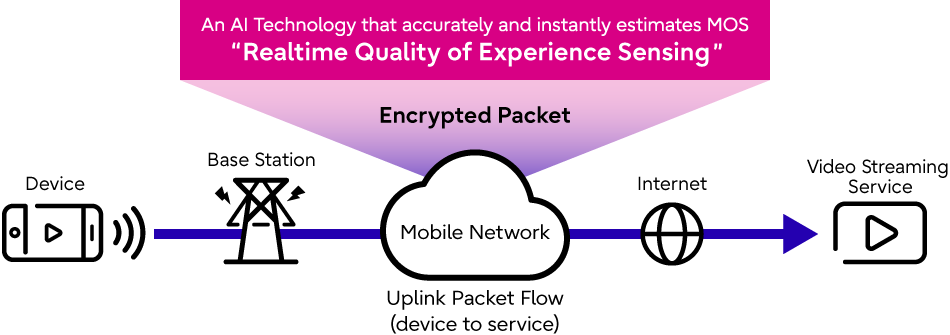

At Fujitsu, we have developed Realtime Quality of Experience Sensing, an AI technology that accurately and instantly estimates MOS from encrypted uplink packets flowing from a device to a video streaming service. This technology eliminates the need to obtain media quality information from applications. Since MOS can be estimated every second, it can be applied to fine-grained network operations.

Figure 1. Overview of “Realtime Quality of Experience Sensing”


The key features of this technology are outlined below.

・Architecture to support a variety of services

Many video streaming services currently use HTTP Live Streaming (HLS) and Dynamic Adaptive Streaming over HTTP (MPEG-DASH) as communication protocols. However, some services may be individually tuned or transition to a new protocol.

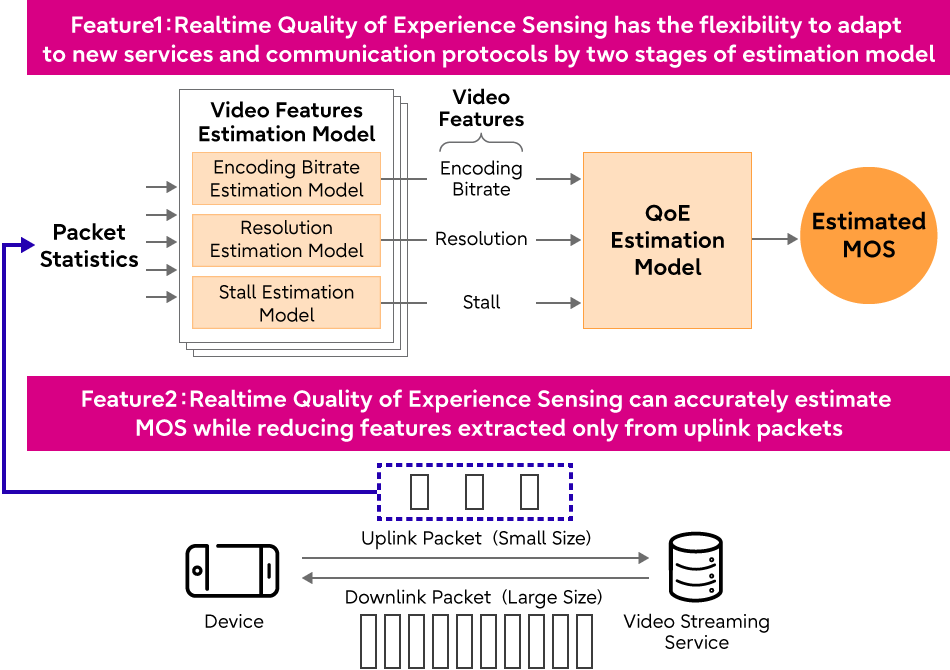

To accommodate this diversity and change in services, we adopted an estimation architecture consisting of two stages: a video feature estimation model that outputs common video features for MOS estimation, such as encoding bit rate, resolution, and stall (whether video playback stops), and a QoE estimation model that outputs MOS from those common video features.

The video feature estimation model takes encrypted packet statistics as input features and outputs general video feature values independent of services and communication protocols. The subsequent QoE estimation model takes these video features as input, allowing it to be independent of service and communication protocols. This provides “Realtime Quality of Experience Sensing” with the flexibility to adapt to new services and communication protocols by relearning or modifying only the video feature estimation model as needed.
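The two-stage split described above can be illustrated with a minimal sketch. Everything in it is hypothetical: the class and function names, the per-service registry, and the toy rules inside both stages are placeholders chosen for illustration, not Fujitsu's models and not the ITU-T P.1203 calculation. The only point it tries to show is that the second stage sees nothing but common video features, so supporting a new service only requires registering a new first-stage estimator.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class VideoFeatures:
    """Common video features shared by all services (names are assumptions)."""
    bitrate_kbps: float
    resolution_height: int
    stalled: bool


# Stage 1 type: packet statistics per time slot -> common video features.
FeatureEstimator = Callable[[List[List[float]]], VideoFeatures]


def toy_feature_estimator(slot_stats: List[List[float]]) -> VideoFeatures:
    """Stage 1 placeholder. The rules below are invented for illustration."""
    requests = sum(row[0] for row in slot_stats)
    stalled = requests == 0            # toy rule: no uplink packets observed
    bitrate = 500.0 * requests         # toy rule, not a measured relationship
    height = 1080 if bitrate >= 3000 else 480
    return VideoFeatures(bitrate, height, stalled)


def toy_qoe_model(features: VideoFeatures) -> float:
    """Stage 2 placeholder: common features -> MOS on a 1 to 5 scale."""
    if features.stalled:
        return 1.0
    score = 2.0 + min(features.bitrate_kbps, 6000.0) / 2000.0
    return max(1.0, min(5.0, score))


class QoEPipeline:
    """Chains a per-service stage 1 model with one shared stage 2 model."""

    def __init__(self, qoe_model: Callable[[VideoFeatures], float]) -> None:
        self.qoe_model = qoe_model
        self.estimators: Dict[str, FeatureEstimator] = {}

    def register(self, service: str, estimator: FeatureEstimator) -> None:
        # Adding or retraining a service touches only stage 1.
        self.estimators[service] = estimator

    def estimate_mos(self, service: str, slot_stats: List[List[float]]) -> float:
        features = self.estimators[service](slot_stats)
        return self.qoe_model(features)


pipeline = QoEPipeline(toy_qoe_model)
pipeline.register("hls", toy_feature_estimator)
print(pipeline.estimate_mos("hls", [[2, 300], [0, 0]]))
```

In a real system both stages would be trained models; the registry keyed by service name is just one way to express "retrain or replace only the video feature estimation model".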

・High-precision and real-time video feature estimation model

Generally, video applications dynamically adjust the quality of the video they request to prevent playback interruptions, depending on the network status and the amount of video data stored in the playback buffer. Because video features are therefore affected by how the packets flowing through the network change over time, we used a neural network model called LSTM (Long Short-Term Memory), which can handle dependencies in time series data.
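For readers unfamiliar with LSTM, the sketch below shows one time step of a standard LSTM cell in plain Python, followed by a loop over a sequence. It is the textbook formulation, not the configuration used in this technology: the layer sizes, the parameter layout (`W`, `U`, `b` as dictionaries keyed by gate name), and the function names are assumptions made for illustration. The cell state `c` is what lets information from earlier time slots influence the output for later ones.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h, c, W, U, b):
    """One step of a standard LSTM cell.

    x: input vector for this time slot; h, c: previous hidden and cell state.
    W[g][j][k], U[g][j][k], b[g][j] are the input weights, recurrent weights,
    and biases of gate g, where g is "i" (input), "f" (forget), "o" (output),
    or "g" (candidate).
    """
    def gate(name, activation):
        out = []
        for j in range(len(h)):
            z = b[name][j]
            z += sum(W[name][j][k] * x[k] for k in range(len(x)))
            z += sum(U[name][j][k] * h[k] for k in range(len(h)))
            out.append(activation(z))
        return out

    i = gate("i", sigmoid)
    f = gate("f", sigmoid)
    o = gate("o", sigmoid)
    g = gate("g", math.tanh)
    # Forget part of the old cell state, then add the gated candidate.
    c_new = [f[j] * c[j] + i[j] * g[j] for j in range(len(h))]
    h_new = [o[j] * math.tanh(c_new[j]) for j in range(len(h))]
    return h_new, c_new


def run_lstm(sequence, hidden_size, W, U, b):
    """Feed a sequence of input vectors through the cell; return the last h."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for x in sequence:
        h, c = lstm_step(x, h, c, W, U, b)
    return h
```

In practice a deep learning framework would supply the cell and train its parameters; the hand-written version is only meant to make the gating arithmetic visible.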

The number of packets flowing during video playback is immense. To reduce the complexity of extracting features from them, it is necessary to focus on fewer packets. When free space in the video application’s playback buffer increases, the state of the buffer and the network is reflected in the video request packets the application sends and in the video data response packets it receives once the video data actually arrives. Based on this idea, the model collects statistics, such as the number of packets in each 0.5-second time slot, as a feature vector from uplink packets only.
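The slot-based collection described above might look like the following sketch. The 0.5-second slot width and the use of uplink packets only come from the text; the `Packet` record, its field names, and the choice of total bytes as a second statistic alongside packet count are assumptions for illustration. Only packet metadata is read, so the payload can stay encrypted.

```python
from dataclasses import dataclass
from typing import List

SLOT_SECONDS = 0.5  # slot width stated in the article


@dataclass
class Packet:
    """Hypothetical capture record; only metadata, never decrypted payload."""
    timestamp: float  # seconds since the start of the capture
    size: int         # bytes on the wire
    uplink: bool      # True if sent from the device to the streaming service


def uplink_slot_features(packets: List[Packet], duration: float) -> List[List[int]]:
    """Return one [packet_count, total_bytes] row per 0.5 s slot, uplink only."""
    n_slots = int(duration / SLOT_SECONDS)
    rows = [[0, 0] for _ in range(n_slots)]
    for p in packets:
        if not p.uplink:
            continue  # downlink packets are ignored entirely
        slot = int(p.timestamp / SLOT_SECONDS)
        if 0 <= slot < n_slots:
            rows[slot][0] += 1
            rows[slot][1] += p.size
    return rows


capture = [
    Packet(0.1, 100, True),
    Packet(0.3, 1400, False),  # downlink, skipped
    Packet(0.4, 200, True),
    Packet(1.2, 150, True),
]
print(uplink_slot_features(capture, duration=2.0))
```

Each row is one element of the time series that a sequence model would consume, one row per slot.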

By focusing on the features of uplink packets, we were able to estimate the video features with nearly the same accuracy while reducing the amount of processed data by 98% compared to using all (uplink and downlink) packets. The developed “Realtime Quality of Experience Sensing” was evaluated using HLS and MPEG-DASH. With over 85% accuracy, it estimated MOS values equivalent to those computed by ITU-T P.1203 from video quality information obtained from video applications.

Figure 2. MOS Estimation Architecture for “Realtime Quality of Experience Sensing”


Comment from developers

Artificial Intelligence Laboratory Development Team Members

Junki Oura

Akihiro Wada

Teruhisa Ninomiya

Kaoru Yokoo

Jun Kakuta

Masatoshi Ogawa

We are striving to develop technology that estimates user QoE with the goal of creating a network that enables enjoyable use of high-resolution video streaming services. By playing hundreds of hours of video in various network environments and repeatedly collecting and analyzing data, we have successfully established the “Realtime Quality of Experience Sensing” technology. We will continue to expand into next-generation services such as virtual reality, augmented reality, and mixed reality, making advanced services that incorporate video ever more pleasant and comfortable to use.

Future plan

To verify the effectiveness of this technology in real-world environments, we plan to demonstrate it through proof of concept (PoC) applications with customers, including mobile network operators, and aim for practical implementation. Additionally, we will develop optimal control technologies for network resources and work towards enriching people’s lives and industrial development through the efficient provision of high-quality networks.

Related links

・Advanced Management of Radio Access Networks using AI (White Paper)