Fujitsu’s Actlyzer Digital Twin technology transforms worker safety and efficiency in manufacturing

June 07, 2023

Automatic camera calibration technology maps field images into 3D space

The digital twin is an important advance that is attracting considerable attention in the manufacturing industry. It is a technology that collects data from the real world using cameras and sensors, reproduces the real world in a virtual space, and runs high-precision simulations to improve quality, reduce costs, and enable predictive maintenance. Importantly, it holds considerable potential for improving worker safety.

The digital twin simulator needs to be fed with information about the movement of people and the operation of objects in the real world, including the three-dimensional positional relationship between people and their surroundings, such as equipment.

Mapping the three-dimensional information is a time-consuming and expensive exercise, as it has to be set up in advance.

Our company is conducting R & D on an AI called "Actlyzer," which recognizes human action from on-site camera images. Some of the core technologies have already been commercialized as Fujitsu Cognitive Service GREENAGE.

We have made some important advances to develop our technology further, making it easy to introduce digital twins in the field by using existing cameras or by incorporating them into a large-scale network. Within our new Actlyzer Digital Twin Collaboration Technology, we have developed automatic camera calibration technology and 3D action recognition technology that can easily and accurately map human action in images to a 3D space reproduced on a simulator.

Challenges to overcome

To capture a worker's movements and actions from an image and reproduce them in the virtual space, it is necessary to associate the two-dimensional coordinates in the image with the three-dimensional coordinates in the real world and feed them back to the simulator. This mapping depends on camera parameters, such as the lens distortion and focal length of the camera that captured the image, as well as the position and orientation of the camera. Accurate camera calibration, which determines the actual camera parameters, is therefore essential.
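To make the dependence on camera parameters concrete, here is a minimal sketch of the standard pinhole projection from a 3D world point to a pixel. It assumes an ideal lens with no distortion and square pixels; the function name `project_point` and the example values are illustrative and not part of Actlyzer.

```python
def project_point(point_world, rotation, translation, focal_px, principal_point):
    """Project a 3D world point to pixel coordinates with an ideal pinhole model."""
    # World -> camera coordinates: X_cam = R * X_world + t (camera pose, the extrinsics).
    x_cam = [
        sum(rotation[r][c] * point_world[c] for c in range(3)) + translation[r]
        for r in range(3)
    ]
    if x_cam[2] <= 0:
        raise ValueError("point is behind the camera")
    # Perspective division, then scale by the focal length and shift to the
    # principal point (the intrinsics).
    u = focal_px * x_cam[0] / x_cam[2] + principal_point[0]
    v = focal_px * x_cam[1] / x_cam[2] + principal_point[1]
    return (u, v)


# Hypothetical camera at the world origin, looking along the world Z axis.
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 0.0]
pixel = project_point([0.5, -0.25, 5.0], R, t,
                      focal_px=1000.0, principal_point=(960.0, 540.0))
print(pixel)
```

Any error in the focal length, the principal point, or the pose shifts every projected pixel, which is why the mapping between image and simulator is only as good as the calibration.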

In a typical camera calibration, a board printed with a checker pattern of a given size is photographed at various positions, orientations and distances, and the accuracy is verified manually. This means that determining camera parameters with high accuracy requires a lot of effort, knowledge and experience.

A novel approach to digital twin technology

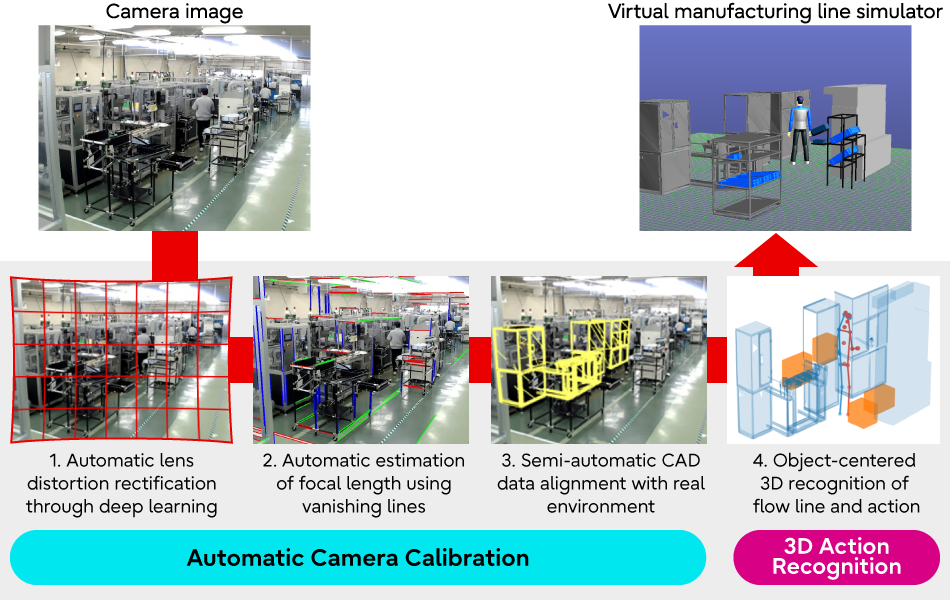

To overcome these limitations and challenges, our newly-developed Actlyzer Digital Twin Collaboration Technology performs semi-automatic camera calibration using a single image frame from a camera installed on the site, determining a 3D perspective of human action from this camera image.

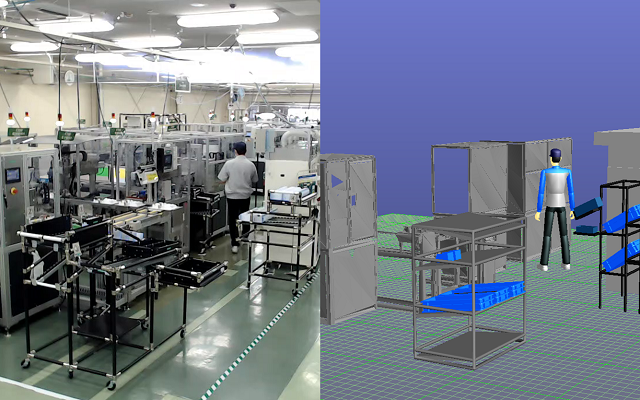

Thanks to this technology, we can easily and accurately realize a digital twin that feeds back human actions on a virtual line simulator (Figure 1).

The technology initially estimates lens distortion parameters from images based on deep learning, and then generates images without any distortion (Figure 1-1).
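As an illustration of what removing distortion involves once the parameters are known, the sketch below uses a one-coefficient radial model, a common simplification. The article does not state which distortion model Actlyzer estimates, so the model, the coefficient `k1`, and the function names are assumptions.

```python
def distort(xu, yu, k1):
    """Apply one-coefficient radial distortion to a normalized image point."""
    r2 = xu * xu + yu * yu
    scale = 1.0 + k1 * r2
    return (xu * scale, yu * scale)


def undistort(xd, yd, k1, iterations=20):
    """Invert the radial model by fixed-point iteration.

    Starts from the distorted point and repeatedly divides by the distortion
    factor evaluated at the current estimate of the undistorted point.
    """
    xu, yu = xd, yd
    for _ in range(iterations):
        r2 = xu * xu + yu * yu
        scale = 1.0 + k1 * r2
        xu, yu = xd / scale, yd / scale
    return (xu, yu)


# Hypothetical barrel distortion (negative k1) applied to a normalized point.
k1 = -0.2
distorted = distort(0.3, 0.2, k1)
recovered = undistort(distorted[0], distorted[1], k1)
print(distorted, recovered)
```

Generating an undistorted image amounts to applying this kind of correction to every pixel position and resampling the picture. The iteration converges quickly for the mild distortion assumed here; strongly distorted lenses may need more coefficients or a different solver.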

It then detects the lines that exist on the floor, walls, ceiling, or objects in the image and estimates which lines are parallel in the real world. In Figure 1-2, the lines that are determined as being parallel are colored red, blue, and green. The focal length is then estimated from the vanishing points, where the extended line segments eventually meet in the depth direction. The technology also uses deep learning to determine the height and angle of both the horizon and the camera.
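The link between vanishing points and focal length can be sketched with a textbook relation: for two vanishing points `v1` and `v2` that come from perpendicular directions in the real world, and a principal point `c`, the focal length satisfies f² = -(v1 - c)·(v2 - c). The code below assumes square pixels, no skew, and a known principal point; it is not necessarily the estimator Actlyzer uses, and the segment coordinates are made up.

```python
import math


def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def vanishing_point(segment_a, segment_b):
    """Intersect two image segments that are parallel in the real world."""
    # A line through two points is the cross product of their homogeneous forms.
    line_a = cross((*segment_a[0], 1.0), (*segment_a[1], 1.0))
    line_b = cross((*segment_b[0], 1.0), (*segment_b[1], 1.0))
    x, y, w = cross(line_a, line_b)
    if abs(w) < 1e-12:
        raise ValueError("segments are parallel in the image as well")
    return (x / w, y / w)


def focal_from_vanishing_points(v1, v2, principal_point):
    """Focal length in pixels from vanishing points of two perpendicular directions."""
    dot = ((v1[0] - principal_point[0]) * (v2[0] - principal_point[0])
           + (v1[1] - principal_point[1]) * (v2[1] - principal_point[1]))
    if dot >= 0:
        raise ValueError("vanishing points are inconsistent with perpendicular directions")
    return math.sqrt(-dot)


# Hypothetical floor edges that converge to the right of the image.
vp_right = vanishing_point(((960.0, 1040.0), (1460.0, 790.0)),
                           ((960.0, 40.0), (1460.0, 290.0)))
# Hypothetical second vanishing point from edges perpendicular to the first set.
vp_left = (-40.0, 540.0)
focal_px = focal_from_vanishing_points(vp_right, vp_left, (960.0, 540.0))
print(vp_right, focal_px)
```

In practice many detected segments vote for each vanishing point, so a robust fit over all of them would replace the two-segment intersection shown here.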

Finally, the user specifies the image coordinates corresponding to the points of the CAD data on the virtual line simulator, using a mouse, and associates the actual image coordinates with the three-dimensional coordinates on the simulator (Figure 1-3).
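One simple way to use such clicked correspondences, assuming all reference points lie on a flat floor, is to fit a plane-to-plane homography from four image points to four floor points in simulator coordinates. The article does not describe how Actlyzer uses the correspondences internally, so this is only an illustrative sketch; the point values and function names are hypothetical.

```python
def solve_linear(a, b):
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [[float(value) for value in row] + [float(b[i])] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        partial = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - partial) / m[r][r]
    return x


def fit_homography(image_pts, floor_pts):
    """Fit a homography (last entry fixed to 1) from exactly four correspondences."""
    rows, rhs = [], []
    for (u, v), (x, y) in zip(image_pts, floor_pts):
        rows.append([u, v, 1, 0, 0, 0, -x * u, -x * v])
        rhs.append(x)
        rows.append([0, 0, 0, u, v, 1, -y * u, -y * v])
        rhs.append(y)
    return solve_linear(rows, rhs) + [1.0]


def image_to_floor(h, u, v):
    """Map a pixel to floor coordinates with the fitted homography."""
    w = h[6] * u + h[7] * v + h[8]
    return ((h[0] * u + h[1] * v + h[2]) / w,
            (h[3] * u + h[4] * v + h[5]) / w)


# Hypothetical mouse clicks (pixels) and matching CAD floor points (meters).
clicked = [(400.0, 900.0), (1500.0, 880.0), (1200.0, 500.0), (600.0, 510.0)]
cad_floor = [(0.0, 0.0), (4.0, 0.0), (4.0, 6.0), (0.0, 6.0)]
H = fit_homography(clicked, cad_floor)
print(image_to_floor(H, 900.0, 700.0))
```

Four points determine the mapping exactly, so click errors pass straight through; using more reference points with a least-squares fit would average them out. Fixing the last entry to 1 is a common convention that fails only in rare configurations.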

This technology allows you to measure camera parameters rapidly without needing any special skills or experience. By using real video data from a factory, we have been able to verify the accuracy of this technology, demonstrating that it can accurately map points in the real world at a distance of approximately 6 m from the camera and approximately 2 m from the reference point aligned with the CAD data, with an error margin of 2 cm.

Figure 1 Actlyzer Digital Twin Collaboration Technology

We have also developed a 3D action recognition technology that uses camera calibration results to recognize action in 3D from a single camera and to relate that action to a specific location or device in the field. The following video shows the actual recognition results.

3D Action Recognition
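To illustrate how calibration results allow a single camera to place a person in 3D, the sketch below intersects the viewing ray through a pixel (for example, a detected foot position) with the floor plane. It assumes an undistorted image, a camera with no roll, and a known height and downward pitch; the function name and values are hypothetical, and the article does not state that Actlyzer localizes people this way.

```python
import math


def pixel_to_floor(u, v, focal_px, principal_point, camera_height_m, pitch_down_rad):
    """Intersect the viewing ray through pixel (u, v) with the floor plane."""
    # Ray direction in camera coordinates (x right, y down, z forward).
    dx = (u - principal_point[0]) / focal_px
    dy = (v - principal_point[1]) / focal_px
    sin_p, cos_p = math.sin(pitch_down_rad), math.cos(pitch_down_rad)
    # Downward component of the ray; it must be positive to reach the floor.
    down = dy * cos_p + sin_p
    if down <= 0:
        raise ValueError("pixel is at or above the horizon")
    scale = camera_height_m / down
    # Floor coordinates: X to the camera's right, Y forward along the ground,
    # both measured from the point directly below the camera.
    return (scale * dx, scale * (cos_p - dy * sin_p))


# Hypothetical camera mounted 3 m high and tilted 45 degrees downward.
foot = pixel_to_floor(960.0, 540.0, focal_px=1000.0, principal_point=(960.0, 540.0),
                      camera_height_m=3.0, pitch_down_rad=math.radians(45.0))
print(foot)
```

With the camera tilted 45 degrees, the image center looks at the floor point as far in front of the camera as the camera is high, which gives a quick sanity check on the geometry.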

Using this technology, we can verify the viability of a specific location and equipment by defining them in advance on the simulator, as well as identifying any potentially dangerous positions and feeding these into the simulator.
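Relating a recognized position to a location defined in advance can be sketched as a point-in-polygon test on floor coordinates. The zone outline and worker positions below are made-up values, and the ray-casting test is a generic technique rather than the simulator's actual interface.

```python
def point_in_polygon(point, polygon):
    """Ray-casting test: True if the point lies inside the polygon."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Count edges that a horizontal ray from the point to +X crosses.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


# Hypothetical danger zone around a machine, in floor coordinates (meters).
danger_zone = [(2.0, 1.0), (4.0, 1.0), (4.0, 3.0), (2.0, 3.0)]
worker_positions = {"worker_a": (3.0, 2.0), "worker_b": (5.0, 2.0)}
alerts = {name: point_in_polygon(pos, danger_zone)
          for name, pos in worker_positions.items()}
print(alerts)
```

Points exactly on the zone boundary may be classified either way by this test, so a safety application would typically pad the zone by a margin at least as large as the expected localization error.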

Comments from the team

Development Team Members from the Advanced Converging Technologies Laboratories.

Atsunori Moteki

Yukio Hirai

Minoru Sekiguchi

Genta Suzuki

Development Team Members from Fujitsu R&D Center Co., Ltd. (FRDC)

Tan, Zhiming

Kang, Hao

Song, Xu

Xia, Conghe

Development Team Members from Fujitsu Consulting India Private Limited (FCIPL)

Amitkumar Shrivastava

Juby Joseph

Amit Goel

Murugan Yuvaraaj

Nilesh Jadhav

Ajinkya Phulke

Sharmistha Kulkarni

Shyam Kadam

Chandranila Das

We are developing human sensing technology based on AI image analysis and context sensing technology to observe people's actions and behavior and to promote the DX process in the field, particularly in areas such as manufacturing and retail.

Our technology offers a number of important advantages, including ease of introduction into the field and highly accurate recognition of workers' actions. We will continue to pursue R & D to build a foundation for industry and technological innovation by making full use of cutting-edge technologies and collaborating with many global customers.

Looking Ahead

This technology and our associated virtual line simulator will be demonstrated for practical use during fiscal 2023.