Adding the Element of Vision to Natural Language Processing

July 6, 2021

JapaneseAs human beings, we perform highly complex and intelligent information processing in order to understand our world and the things in it. This relies on our ability to combine a variety of inputs and combine them into a single comprehensive result.

Artificial Intelligence (AI) research, on the other hand tends to use a single information source (unimodal information), which in turn limits its potential. However, recent progress in AI technology development is enabling researchers to change this, successfully combining a variety of information such as texts and images (multimodal information).

As part of our research focus on multimodal information processing, Fujitsu Research recently took part in the latest SHINRA2020-ML ![]() task in collaboration with the University of Melbourne, involving the classification of multilingual Wikipedia articles. Established in 2017, SHINRA is a resource creation project that aims to structure the knowledge in Wikipedia.

task in collaboration with the University of Melbourne, involving the classification of multilingual Wikipedia articles. Established in 2017, SHINRA is a resource creation project that aims to structure the knowledge in Wikipedia.

We developed a system using multimodal information and achieved the top-ranked performance in four different languages. We also examined in detail the effect of combining textual and visual information on the SHINRA2020-ML. Our results were presented at EACL2021, one of the world’s top conferences concerning natural language processing.

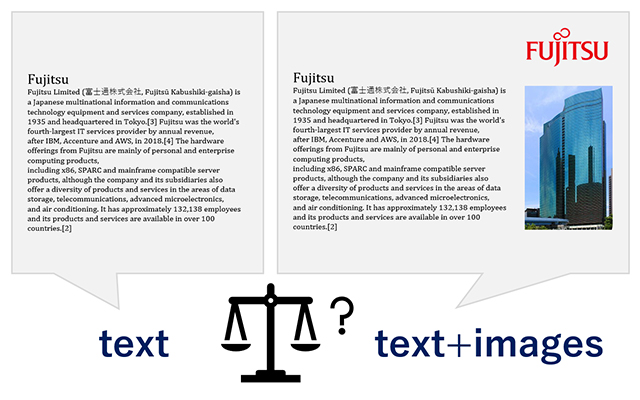

Text and drawings are excerpted from wikipedia

https://en.wikipedia.org/wiki/Fujitsu

How can we utilize multimodal information?

In the field of natural language processing, it has been demonstrated that language models trained on a huge amount of text data have the ability to solve a wide cross-section of language understanding tasks, achieving high performance results ([1] [2]). Nevertheless, these types of language models may well fail on tasks that are very easy for human beings. For example, it was reported that GPT-3 [2], one of the super-large language models, could not answer the following question: “If I put cheese into the fridge, will it melt?” In order to answer this question, one approach may involve training the model with a variety of texts, including information such as the temperature inside a fridge, the temperature at which cheese melts, etc. However, it is not viable either in terms of cost or scope to collect texts about everything in the world that might be required to train a language model. Human beings, on the other hand, acquire knowledge not only from texts but also other information sources such as images and sound. For example, even if we have no written information about a fridge, such as the color, shape, functionality, temperature, and so on, we know what we need to know just by seeing the fridge. Similarly, including images of fridges might represent one way of complementing missing knowledge in a language model. Our aim is to enable more effective knowledge acquisition through our multimodal information processing research, and achieve ever more advanced problem-solving in the future.

We are already seeing a considerable body of research using multimodal information especially for text- and visual-based information. Many unexplained elements remain, however, such as each type of information’s role and interaction, and the mechanism relating to each model etc. On the SHINRA2020-ML task, we took the opportunity to clarify how multimodal information contributes to the overall performance of a model. Wikipedia represents a very good starting point, thanks to the wealth and scope of information about Wikipedia articles, including not only text and images, but also categories, knowledge graphs, and so on.

Giving Natural Language Processing Engines their own “eyes”

Our model uses four types of information drawn from the various Wikipedia data available: textual information, image information, page-layout information, and knowledge-graph information based on Wikidata ![]() . First, we obtain four different representations of a document by learning four different models, using four types of information separately. Then, we train a model to integrate these representations and obtain the final output.

. First, we obtain four different representations of a document by learning four different models, using four types of information separately. Then, we train a model to integrate these representations and obtain the final output.

For the SHINRA2020-ML ![]() multilingual Wikipedia classification competition, our model outperformed other text-based models in 4 out of 28 language subtasks (English: 1st place out of 4 teams, Spanish: 1st place out of 5 teams, Italian: 1st place out of 5 teams, Catalan: 1st place out of 3 teams). These results indicate how different types of information contributed to improving the overall accuracy. By integrating “eyes” (i.e., image information) into the natural language processing engine, the model indeed learned something new that contributed to accuracy.

multilingual Wikipedia classification competition, our model outperformed other text-based models in 4 out of 28 language subtasks (English: 1st place out of 4 teams, Spanish: 1st place out of 5 teams, Italian: 1st place out of 5 teams, Catalan: 1st place out of 3 teams). These results indicate how different types of information contributed to improving the overall accuracy. By integrating “eyes” (i.e., image information) into the natural language processing engine, the model indeed learned something new that contributed to accuracy.

Next, we examined the contribution of textual information and image information in detail. The experimental results on 5 different languages clearly showed that the effectiveness of images depends on a neural-based language pre-trained model, using a large-scale text dataset. Without the neural-based language model, images did improve the performance. However, if the neural-based language model was utilized, images became less effective.

Our findings were recently published at the EACL 2021, a top-level international natural language processing conference, gaining recognition for their meaningful insights into multi-modal processing research.

Recognition on the World Stage

We have published two papers relating to this research:

- At NTCIR-15 introducing our research on the task of SHINRA2020-ML,

- At EACL 2021 discussing the effectiveness of images for text classification.

We plan to continue our research, focusing next on how to improve the quality of document representations with various multimodal information.

The 15th NTCIR Conference on Evaluation of Information Access Technologies ![]()

(Dec. 8th, 2020 ~ Dec. 11th, 2020)

・Title :UOM-FJ at the NTCIR-15 SHINRA2020-ML Task

・Authors :Hiyori Yoshikawa (Fujitsu Lab.), Chunpeng Ma (Fujitsu Lab.), Aili Shen (UniMelb), Qian Sun (UniMelb), Chenbang Huang (UniMelb), Guillaume Pelat (École Polytechnique), Akiva Miura (Fujitsu Lab.), Daniel Beck (UniMelb), Timothy Baldwin (UniMelb), Tomoya Iwakura (Fujitsu Lab.)

The 16th Conference of the European Chapter of the Association for Computational Linguistics ![]()

(Apr. 19th, 2021 ~ Apr. 23rd, 2021)

・Title:On the (In)Effectiveness of Images for Text Classification

・Authors:Chunpeng Ma (Fujitsu), Aili Shen (UniMelb), Hiyori Yoshikawa (Fujitsu), Tomoya Iwakura (Fujitsu), Daniel Beck (UniMelb), Timothy Baldwin (UniMelb)

References

[1] Devlin et al.: BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding ![]() . In NAACL, pp.4171-4186, 2019.

. In NAACL, pp.4171-4186, 2019.

[2] Brown et al.: Language Models are Few-Shot Learners. arXiv preprint arXiv:2005.14165, 2020.

For more information on this topic:

fj-shinra2020@dl.jp.fujitsu.com

Please note that we would like to ask the people who reside in EEA (European Economic Area) to contact us at the following address.

Ask Fujitsu

Tel: +44-12-3579-7711

http://www.fujitsu.com/uk/contact/index.html![]()

Fujitsu, London Office

Address :22 Baker Street

London United Kingdom

W1U 3BW