Promoting Trustworthy AI: new risk comprehension technology cuts risk assessment time from days to just two hours!

April 12, 2023


AI is increasingly being used in various fields such as healthcare, finance, and manufacturing. There are, however, concerns that AI may make unfair judgments because of gender or ethnic bias in the models or in the data used to train them. In order to promote trustworthy AI, the European Union is working towards enacting the AI Act in the coming years.

At Fujitsu, we are looking at new ways of improving our understanding of AI decision-making. In this case, we have used articles reporting problems (incidents) caused by AI as the basis for developing our new AI Ethics Risk Comprehension technology. This technology helps to translate generic ethics requirements into concrete events under a particular AI use case and to uncover the mechanism by which an incident eventually occurs. It has been incorporated into Fujitsu’s AI Ethics Impact Assessment method and made into a toolkit, as part of our ambition to offer it for wider AI risk assessment applications, including conformity assessment as set out in the draft AI Act.

The breakthrough with this technology is that we can now automate risk assessment, previously a highly labor-intensive task, and reduce the time needed for a risk assessment from days to just two hours.

AI ethics, background and challenges

Since 2019, when the European Union’s High-Level Expert Group on AI published the Ethics Guidelines for Trustworthy AI and the OECD published its Principles on Artificial Intelligence, a number of organizations and companies have published their own guidelines on AI ethics. These documents define requirements that AI must meet during the design, development, and operational phases in order to comply with the values and norms that it must share with society.

The AI Act, which was drafted in April 2021, will impose hefty fines and penalties on service providers for violations such as failing to audit their services properly when they apply AI to prohibited or high-risk applications. This applies to services offered in Europe even if they are hosted in other regions, so companies based outside Europe are also required to comply with this legislation.

Based on these trends, leading companies and organizations have begun to employ checklists when they study compliance with ethical principles and development guidelines. However, there is often no clear evidence that such checklists are either necessary or sufficient with respect to the requirements stated in the principles and development guidelines, even though conformity assessment is becoming increasingly crucial as the AI Act moves toward becoming law.

As these checklists are intended to be applied to various AI use cases, risk assessment workers have to consider how each check item relates to a particular AI use case. This makes it difficult to evaluate risks and apply swift and appropriate countermeasures, holding us back from achieving the social implementation of trustworthy AI.

AI Ethics Risk Comprehension technology

As explained above, the fact that various stakeholders have marked items against a checklist does not provide clear evidence that the relevant ethical requirements have been considered. To overcome this challenge, we made the Fujitsu AI Ethics Impact Assessment method publicly available in 2022 to assist the process of AI ethical risk analysis.

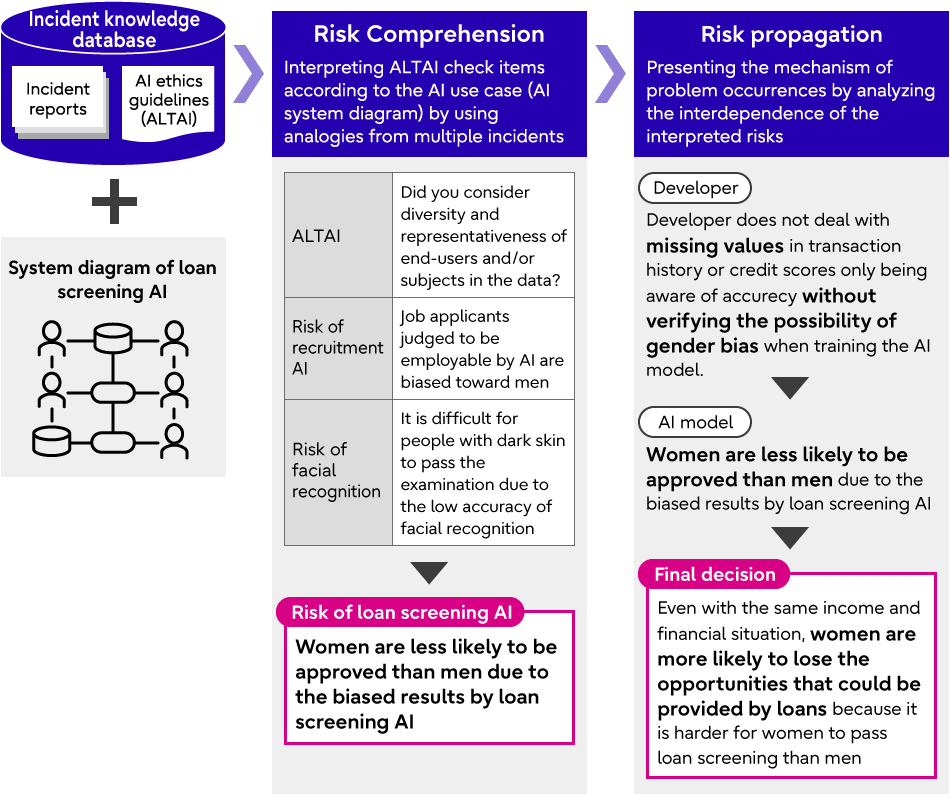

As the next step, we are now unveiling our AI Ethics Risk Comprehension technology, which supports the rapid consideration of countermeasures. By making use of articles from various media that report problems caused by AI (incidents), it interprets what the sentences in the checklist mean for the particular AI use case under discussion, and then helps assessors understand the mechanism by which incidents could materialize.

The key points of this technology are as follows.

Risk comprehension

We have built an incident knowledge base that associates thousands of sentences or paragraphs in incident reports with one or more of the 150 check items. Using this approach, the technology provides risk assessors with tips for translating the descriptions of check items into situations in which risks are exposed. It gives them prompts in concrete terms such as stakeholders and their operations, AI models and their evaluation indicators, as well as data and their processing procedures.

Taking loan screening AI as an example use case, let’s consider a check item stating "Did you consider diversity and representativeness of end-users and/or subjects in the data?". The technology suggests interpreting "end-users and/or subjects" as "recipients" and "diversity and representativeness" as "difference between men and women, bias toward loans to men".
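
As a rough illustration of the idea described above, the incident knowledge base can be pictured as an index from check items to incident snippets. The sketch below is not Fujitsu's implementation: the class names, the check item ID `CHK-DIVERSITY`, and the snippet text are all hypothetical placeholders chosen to mirror the loan screening example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class IncidentSnippet:
    """One sentence or paragraph from an incident report (placeholder text here)."""
    text: str
    stakeholders: tuple[str, ...]
    check_item_ids: tuple[str, ...]  # a snippet may relate to one or more check items


class IncidentKnowledgeBase:
    """Hypothetical index from check item IDs to incident snippets."""

    def __init__(self) -> None:
        self._by_item: dict[str, list[IncidentSnippet]] = defaultdict(list)

    def add(self, snippet: IncidentSnippet) -> None:
        # Register the snippet under every check item it is associated with.
        for item_id in snippet.check_item_ids:
            self._by_item[item_id].append(snippet)

    def tips_for(self, item_id: str) -> list[str]:
        # Turn matching snippets into short, concrete prompts for the assessor.
        return [
            f"{', '.join(s.stakeholders)}: {s.text}"
            for s in self._by_item.get(item_id, [])
        ]


kb = IncidentKnowledgeBase()
kb.add(IncidentSnippet(
    text="bias toward loans to men",   # illustrative wording, not a real report
    stakeholders=("recipients",),
    check_item_ids=("CHK-DIVERSITY",),  # hypothetical check item ID
))
tips = kb.tips_for("CHK-DIVERSITY")
```

Here `tips` holds one prompt that ties the generic check item to the concrete stakeholder and situation, which is the kind of hint the text describes. How the real technology selects and ranks snippets is not stated in this article.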

Risk propagation analysis

It is difficult to take measures against risks based only on an interpretation of how they are exposed. So, using the incident knowledge base again, the technology analyzes how risks propagate through stakeholder interactions.

Taking our loan screening AI example again, propagation analysis focusing on the risks associated with fairness, which is one of the requirements of trustworthy AI, can discover the dependencies between the risks associated with AI developers, AI models, and the final decisions of loan officers.

This enables us to identify the factors that cause each unethical event in narrative form, by showing risk assessors the mechanism by which those factors evolve, through interactions between stakeholders, until they could trigger the unethical event.
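
One simple way to picture propagation analysis is as path finding over a directed graph of stakeholder interactions. The following is a minimal sketch under that assumption, not the method used in the toolkit; the function name `propagation_paths` and the three-node graph are hypothetical and taken only from the loan screening example above.

```python
def propagation_paths(edges, source, target):
    """Return every simple path from source to target in a directed graph."""
    paths = []

    def walk(node, path):
        if node == target:
            paths.append(path)
            return
        for nxt in edges.get(node, []):
            if nxt not in path:  # skip nodes already visited to avoid cycles
                walk(nxt, path + [nxt])

    walk(source, [source])
    return paths


# Hypothetical interaction graph: a risk introduced by the developer reaches
# the model, whose output then influences the loan officer's final decision.
edges = {
    "AI developer": ["AI model"],
    "AI model": ["loan officer decision"],
}
paths = propagation_paths(edges, "AI developer", "loan officer decision")
narrative = " -> ".join(paths[0])
```

Each path can then be read as a short narrative of how a risk factor travels between stakeholders before it surfaces as an unethical event.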

Risk comprehension process flow in loan screening AI


Human resource matching AI use case

In order to verify the value of this technology, we applied the AI Ethics Risk Comprehension technology to "Shigotoba Kurashiba Iwate*", a website that serves both job seekers and recruiting companies in Iwate Prefecture to promote U-turn and I-turn employment (people returning to their home region, or moving from urban areas to the region, for work). The AI Ethics Impact Assessment method demonstrated its ability to identify 150 potentially unethical events lurking in the use case, each of which is given a narrative of how it could be triggered. Examining risks within this particular use case showed that all measures discussed at Job Café Iwate* could be justified as trustworthy, because they address the factors that have led to past incidents.

- *Shigotoba Kurashiba Iwate: a service that Iwate prefecture outsourced to Fujitsu Japan for development, maintenance and operation

- *Job Café Iwate: a labor support facility in Iwate prefecture, managed and operated by Fujitsu Japan

We then went on to make the AI Ethics Risk Comprehension technology into a tool and provided it to the ethical risk assessment team at our company. They were able to demonstrate its effectiveness, significantly reducing the risk assessment work required from several days to just two hours. This provided clear proof of just how cost-effective the toolkit implementing AI Ethics Risk Comprehension technology can be. It ensures compliance with AI ethics guidelines by transforming generic check items covering unseen applications into a narrative that clearly demonstrates how things can evolve to the point where they activate risks.

Developer Comments

AI Trust Research Center Development Team Members

Satoko Shiga

Kyoko Ohashi

Akira Sato

Izumi Nitta

Sachiko Onodera

In our project, we are conducting research on AI ethical risk management with the aim of promoting trustworthy AI. We will continue to contribute to the development and dissemination of technologies that enable AI developers to develop AI systems with confidence, as well as enabling customers to use AI systems with complete peace of mind, rather than being concerned about ethical risks and hesitating to use AI as a result.

Future Vision

We will continue to expand the scope of AI applications covered by this method and strengthen our response to AI ethical risks. We also want to help create a new generation of trusted AI by making our new toolkit and the knowledge base it contains widely available to customers and partners. Our objective is to encourage its adoption as one way of certifying compliance with the AI Act as it comes into force in the near future.

Related links

- Fujitsu delivers new resource toolkit to offer guidance on ethical impact of AI systems: Fujitsu Global (February 21, 2022 press release)

For more information on this topic

AI Trust Research Center, Fujitsu Research

fj-labs-aie-info@dl.jp.fujitsu.com