NVIDIA DGX A100

The universal system for AI Infrastructure

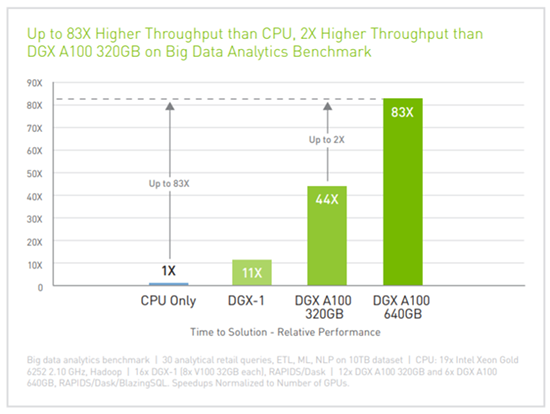

NVIDIA DGX™ A100 is the universal system for all AI workloads, offering unprecedented compute density, performance, and flexibility. NVIDIA DGX A100 features the world’s most advanced accelerator, the NVIDIA A100 Tensor Core GPU, enabling enterprises to consolidate training, inference, and analytics into a unified, easy-to-deploy AI infrastructure that includes direct access to NVIDIA AI experts.

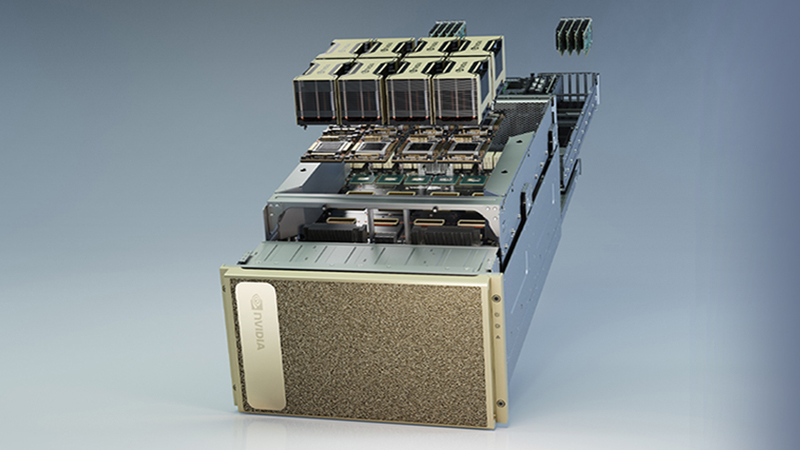

Now in its third generation, the NVIDIA DGX A100 offers even more performance and capabilities with the new A100 GPUs. The 6U form factor is packed with power, complete with 8 x NVIDIA A100 Tensor Core GPUs and 6 x NVLink switches. The base model packs a total of 320GB of GPU memory and 15TB of internal NVMe storage. If you require more, the 640GB model doubles the internal NVMe storage of the base model to 30TB. Computing power can be finely allocated using the Multi-Instance GPU (MIG) capability in the NVIDIA A100 Tensor Core GPU. This enables administrators to assign resources that are right-sized for specific workloads.
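To put the figures above in per-GPU terms, here is a minimal sketch of the arithmetic in Python. The dictionary layout and function names are hypothetical, and the limit of seven MIG instances per A100 GPU is an assumption taken from NVIDIA's general MIG documentation rather than from this page.

```python
# Illustrative arithmetic only: names and data layout are hypothetical.
GPUS_PER_SYSTEM = 8  # 8 x A100 GPUs per DGX A100, as stated above

# Assumption (not stated on this page): an A100 GPU can be split
# into at most 7 MIG instances.
MAX_MIG_INSTANCES_PER_GPU = 7

# Totals per system for the two models described above.
MODELS = {
    "320GB": {"gpu_memory_gb": 320, "nvme_tb": 15},
    "640GB": {"gpu_memory_gb": 640, "nvme_tb": 30},
}


def per_gpu_memory_gb(model: str) -> int:
    """Total GPU memory divided evenly across the eight GPUs."""
    return MODELS[model]["gpu_memory_gb"] // GPUS_PER_SYSTEM


def max_mig_instances() -> int:
    """Upper bound on MIG instances across one whole system."""
    return GPUS_PER_SYSTEM * MAX_MIG_INSTANCES_PER_GPU


if __name__ == "__main__":
    for name, spec in MODELS.items():
        print(name, per_gpu_memory_gb(name), "GB per GPU,", spec["nvme_tb"], "TB NVMe")
    print("Max MIG instances per system:", max_mig_instances())
```

Under these assumptions, a single system could in principle be carved into dozens of isolated GPU slices, which is what makes right-sizing for small inference jobs practical.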

The system comes complete with the DGX software stack and optimized software from NGC. The combination of dense compute power and complete workload flexibility makes DGX A100 an ideal choice for both single-node and large-scale deployments.

Get in touch

Speak to one of our AI experts for more information.
