How can we rebuild trust in the digital world?

An interactive discussion with Professor Michael Sandel

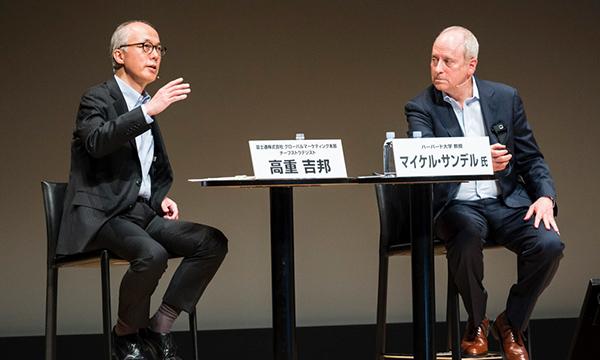

“If you could speak to the ‘digital twin’ of a deceased family member, would you want to do so?” With provocative questions like this, Professor Michael Sandel led a frank and wide-ranging discussion that struck at the heart of many of the problems we face in today’s digital world. Speaking at the Fujitsu Forum 2019 Frontline session on 16th May, Professor Sandel was joined by Yoshikuni Takashige, Fujitsu’s Chief Strategist for Global Marketing, and eight other panelists in a lively discussion of ethical challenges related to personal data and AI, as well as the responsibility of corporations in society.

The eight panelists included Yuko Yasuda, Public Affairs Specialist at the United Nations Development Programme (UNDP) Representative Office in Tokyo; Edmund Cheong, deputy director of the Rehabilitation Center of the Malaysian Social Security Organization; Hazumu Yamazaki, co-founder and CSO of the speech and emotion analysis startup company Empath; and Yasuhiro Sasaki, a director and business designer at the design innovation company Takram. Also on the panel from Fujitsu were Yumiko Kajiwara, Corporate Executive Officer in charge of industry-academia-government collaboration and diversity; Ian Bradbury, CTO of Financial Services in Fujitsu UK&I; Sebastian Mathews, Process Manager at the Global Delivery Center in Fujitsu Poland; and Mika Takahashi of the Global Marketing Unit.

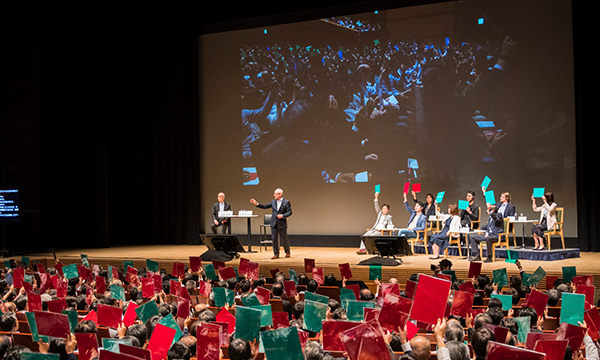

Audience members attending the discussion at the Tokyo International Forum were also encouraged to participate, and were provided with red and green cards that they could hold up to vote yes or no on various questions that Professor Sandel asked.

Yoshikuni Takashige

Hello everyone. Today we would like to discuss how we should rebuild ‘trust’ in the digital world with Professor Michael Sandel, who is well-known for his lectures at Harvard University.

Digital technologies such as the internet and smartphones have made our everyday lives more convenient, and have enabled us to generate new businesses like e-commerce and social networks. But on the other hand, don’t you feel something is wrong? The leakage of Facebook users’ personal data last year is one example that comes readily to mind for many of us. In Fujitsu’s global survey, more than 70% of respondents were concerned about the trustworthiness of online data and the risk of their privacy being compromised. People are losing confidence in digital technologies and trust in the companies that use them.

What do you think about this, Professor Sandel?

Michael Sandel

I think it’s one of the biggest ethical questions we face today. If we don’t think through the ethical implications of new digital technologies, then companies will have problems. There will be a loss of trust, as you said, and citizens will view technology as a threat rather than as an opportunity. So it’s important to have public debates even about some of the hard ethical questions posed by digital technologies and that is what we’re going to do today.

How much are we willing to compromise our privacy?

Michael Sandel

Let’s begin with one of the big ethical questions we face today - the use of personal data. Now companies often offer services or benefits in exchange for our personal data. The question is: Are you willing to give your personal data in exchange for better service? Let’s begin with the question of health insurance. Now, you’re probably all familiar with wearable devices that measure how long you sleep at night, what you eat, whether you eat broccoli very often which is healthy for you, or how much alcohol you consume. Now suppose that this wearable device could measure all of these things, and would send that data to your health insurance company. In exchange for sharing that personal data, if your behavior is healthy, they will give you a big discount on your insurance policies. How many would wear the device to send the data to the insurance company? Those who say yes, raise the green card; those who say no, raise the red card, and we will see what people think.

About 70-80% of the audience seems willing to share their data. And on our panel, six are willing to share, and two are not. Yuko, you indicated you would not take this deal. Tell us why.

Yuko Yasuda

There are two reasons. Although I like broccoli and I do yoga, I’m not comfortable sharing my private information with companies. I feel exposed - like I’m being watched wherever I go. The fear I feel outweighs the benefit of any discount. Also, if only healthy people are eligible for the discount, the end result might be, “You cannot get insurance because you have a genetic abnormality.” That kind of society seems quite frightening.

Michael Sandel

So let’s now hear from the majority of the panelists, who are willing to send in their health data. Yasu, what do you think, and in response to Yuko’s point, would you also send in your genetic data?

Yasuhiro Sasaki

I feel that anything is okay if it actually makes the insurance cheaper. I don’t think data about my diet or sleeping habits will reveal anything significant, so I’m willing to provide such data if I can get something in return. And in the case of genetic information, if many people share their genetic information, it may contribute to the discovery of new therapies and advances in medicine.

Michael Sandel

All right, so you would share everything, because you don’t think it is an absolutely essential aspect of your identity. In that case, what type of personal data would you not want to share?

Yasuhiro Sasaki

I wouldn’t want to share private conversations that I have with my family, friends, and other people who are important to me. I wouldn’t want to involve these people.

Michael Sandel

Yuko will never send that information, even if it is favorable, and Yasu says it’s okay because that kind of information is not personal. Ian, what do you say? You were willing to send it in.

Ian Bradbury

Yes, but I would expect the insurance company not to share my data, and to use it only for the purpose for which it was intended.

Michael Sandel

Let me ask you then, do you trust Facebook to keep your data private?

Ian Bradbury

I only put information that I’m willing to share on Facebook. I think it has value as a platform, but I don’t put all my information on it. That’s the difference between the insurance company and Facebook.

Michael Sandel

Is there anyone on the panel who doesn’t use Facebook? Yumiko...why don’t you use it?

Yumiko Kajiwara

I don’t like to post personal information somewhere that can be viewed by anyone.

Michael Sandel

Now I want to ask about another use of personal data. How do you feel about providing personal data to an automobile insurance company? What if the company offers you a discount if you put a device on your car that tracks how you drive, whether you exceed the speed limit, whether you brake suddenly, whether you make sharp turns, and what time of night you drive? Let’s get the audience and panelists to vote on this as well.

Now we’re seeing some disagreement. About 90% of the audience is willing to share their driving data, whereas only four out of our eight panelists are willing to do so.

Edmund, you were willing to share your health data, but you’re not willing to share your driving data. Why is that? Is driving more personal and intimate to you than your health?

Edmund Cheong

It is not that driving is more intimate, but I don’t think my driving data would result in a discount. It might even cause my insurance rates to go up instead.

Michael Sandel

Because you are a bad driver?

Edmund Cheong

No, I’m a reasonably competent driver. But I often have to drive at night, and I often drive long distances.

Michael Sandel

I see. So the data might not work in your favor. Who else would not send the driving data, but did send the health data? Mika?

Mika Takahashi

My reason is similar to Edmund’s. I’m actually a terrible driver, so I don’t think I would get a discount. Also, I’m concerned about the use of location data. I voted “No” because it scares me to think that I could be tracked everywhere I go.

Michael Sandel

So Mika, you consider location data to be personal just as Yuko considers health data to be personal. And Yasu said he doesn’t care if companies access his health data. But what about location data? Yasu, how do you feel about companies having your location data and knowing where you go? That seems quite personal.

Yasuhiro Sasaki

It’s personal, but I don’t see it as a problem because I trust the car insurance company not to leak that information. If I thought the company wasn’t trustworthy, I wouldn’t use its services.

Michael Sandel

Now, this issue about location data has come up in the debate about Uber, because they need to access users’ location data. For example, they did a study and found that a certain area was visited by many men late at night, and the suspicion arose that prostitution was going on in that area. Eric Schmidt, the former CEO of Google, said that if you don’t want people to know about something you’ve done, maybe you shouldn’t do it in the first place. This is a very sensitive issue. It is a question of ethics and privacy. What do you think about this, Yoshi?

Yoshikuni Takashige

It is certainly sensitive. It’s rare in Japan, but if a terrorist were to get the location data of a specific person, it would make it easier to kidnap or murder that person. Sharing can certainly be dangerous if you don’t know how a company will manage and handle your data.

Edmund Cheong

Speaking from my own perspective, I’m here in Japan now, but my wife and two children are in Malaysia. So I would worry about my family if people can easily find out when I am away.

Michael Sandel

Because your family is more vulnerable...

Edmund Cheong

Exactly! That’s my point.

Michael Sandel

So let me summarize what we’ve been saying. It’s clear there are certain types of personal data that we don’t want other people to know. One principle that has begun to emerge from the panel discussion is that we are bothered if the data is somehow deeply connected to who we are, to our personal identity. But it is also clear that people have different views about what sort of data that is.

How much do we trust AI?

Michael Sandel

I would like to shift now to another ethical question that arises about big data, AI, and algorithms. Do you think that AI can make better judgments than human beings?

Let’s take the practical example of a medical diagnosis. Research has already demonstrated that AIs are better at analyzing CT scans than human doctors.

Suppose a loved one was suffering from a serious illness and had a CT scan. Would you rather diagnostic analysis of the scan was performed by a doctor or by an AI? Hold up your cards to indicate your choice.

The audience is fairly evenly split between the AI and the doctor. Our panelists, with the exception of two people, say they prefer to have the AI make the diagnosis. Hazumu, you chose the doctor...why do you think the doctor is better?

Hazumu Yamazaki

As for statistical judgment, AI is superior, and the more case data we have in the future, the more accurate its diagnoses will become. But for now, I still want to rely on the doctor.

Michael Sandel

Who else voted for the doctor? Sebastian, you also chose the doctor...

Sebastian Mathews

I would want the doctor to review the results of the AI analysis, and then make a final diagnosis.

Michael Sandel

But why wouldn’t you just rely on the AI device?

Sebastian Mathews

An AI may be able to analyze the data, and be less prone to error, but I don’t necessarily think its reasoning can be trusted. I believe that the AI and the doctor should work together.

Michael Sandel

So you would ultimately rely on people to make the final diagnosis?

Sebastian Mathews

In the end, yes.

Michael Sandel

Hazumu? What do you think?

Hazumu Yamazaki

People have consciousness that is required for reasoning and that AIs don’t possess. An AI can only perform statistical analysis - it doesn’t really understand what it is doing. The process of human perception is totally different from the way a machine functions.

Michael Sandel

But in this case, we are only relying on the machine for CT image analysis...

Hazumu Yamazaki

I don’t believe we can trust AIs that much yet.

Michael Sandel

So you don’t trust machines then?

Hazumu Yamazaki

If they are used more widely, we will be able to place more trust in them. For example, autonomous driving is expected to help reduce traffic accidents significantly. But many people are still afraid of self-driving cars. It’s not just a matter of statistics, it’s a question of being able to accept it mentally.

Michael Sandel

Let’s look at another example - this time involving the evaluation of employee performance.

When performance reviews are carried out by human bosses, they are often subject to certain biases. An AI device, on the other hand, can evaluate performance using algorithms with clearly defined metrics. Putting yourself in the position of an employee, which would you rather be judged by, your boss or an AI device?

So it seems that almost 80% of the audience would love or trust their bosses more! Our panelists, on the other hand, are evenly divided. Yumiko, why did you choose the AI?

Yumiko Kajiwara

I thought the AI would be more objective and fair, whereas people tend to have likes and dislikes that might affect their evaluation. I don’t think the AI would be subject to such biases.

Michael Sandel

So you think it would be more objective.

Yumiko Kajiwara

Human judgements are inevitably more subjective. And the evaluation might be affected by the relationship between the boss and the employee. I guess many of the people in the audience are in positions to perform the evaluation.

Michael Sandel

You are in a position where you have evaluated a lot of employees yourself. It sounds like you are almost mistrusting the judgements you have made in the past?

Yumiko Kajiwara

It may sound that way, but I have tried my best to evaluate my subordinates’ work objectively. It’s just that if I put myself in the position of the one being evaluated, I think I would choose the objectivity of the AI.

Michael Sandel

Yuko, you chose the boss. What do you say to Yumiko’s argument about objectivity, overcoming bias?

Yuko Yasuda

I don’t think an AI can understand context. Even if a project doesn’t go as planned, employees may have made important contributions that are not reflected in the numbers or data. For example, there are people who provide mental support and boost their coworkers’ spirits when a team’s mood goes down. I doubt if an AI can properly evaluate those contributions.

Yoshikuni Takashige

Let me say something here. I think it is very doubtful that any of us can evaluate our work strictly by the numbers, compressing it all into metrics that can be measured objectively. There are invisible elements that can only be measured by human sensitivity. It’s true that if a relationship is bad, it will negatively affect the evaluation. But in a way, that is a realistic reflection of the workplace relationship.

Michael Sandel

So you’re suggesting that with many jobs, it may not be possible to evaluate performance with the metrics that an AI can measure. So how can we evaluate complex human skills that cannot be assessed with such metrics? Ian, you chose evaluation by a human boss. What are your thoughts?

Ian Bradbury

I wonder if an AI can properly evaluate employees who play supporting roles on a project. And I don’t think it can effectively take into account social perspectives like inclusivity in the workplace.

Michael Sandel

Yumiko, what do you think?

Yumiko Kajiwara

We use both absolute and relative evaluation methods. For absolute evaluation, it is important to identify and consider personal merits that numbers cannot reveal as well. In the case of relative evaluation, however, we have to rely on numerical values as a basis for comparison. In the end, the best solution may be that human bosses make a final evaluation incorporating the AI’s evaluation. To be honest, I also want to have personal words of encouragement from my boss.

Michael Sandel

Edmund, what do you think?

Edmund Cheong

There is some truth in what Yumiko said, but I think AI always comes first. For example, a young, hard-working employee may put in long hours, but because he is self-effacing, his efforts may not be visible to his boss. So it is important for the skills and work of such a person to be evaluated objectively. We have used predictive AI models, and have found them to be free of emotional bias. The important thing is to give everybody an equal opportunity.

Michael Sandel

So emotion gets in the way of reason and judgement?

Edmund Cheong

Exactly! If I argue with my wife in the morning, I may give someone a bad review because I am still emotional.

Michael Sandel

So emotion always destroys judgement?

Edmund Cheong

Yes. We are made of biological chemical substances. We are influenced by our hormones.

Michael Sandel

Alright, we are getting into an important issue here - the idea that emotion always interferes with good judgment, so better to use the AI machine which is emotionless. Who disagrees with that? Hazumu, what about you?

Hazumu Yamazaki

I work in the field of emotion analysis. Clinical cases are revealing that people actually cannot make decisions without emotion. In his 1994 book Descartes’ Error, Antonio Damasio describes a person struck in the head by a spear. Because of the damage to the frontal lobe of his brain, he became incapable of making any decisions, since he had to rely on logic alone. Recent philosophical thinking also suggests that emotions are central to our ability to make choices.

Michael Sandel

To make a good decision, do you need emotion?

Hazumu Yamazaki

That is what I think.

Michael Sandel

In our discussion so far some panelists have expressed the opinion that AI’s judgement is good because it is objective and unbiased, while others have suggested that emotions actually play an important role in judgement. I would like to test these ideas by considering another question, one that deals with matchmaking.

Let’s imagine an AI-powered application that has analyzed massive amounts of data and produced a shortlist of three people that it predicts to be your best lifetime partners. In choosing someone to marry, would you trust the AI’s recommendations, or would you trust the advice of friends and parents?

The audience seems to generally favor the AI. On the panel, we have five who chose the AI, two who chose friends and parents, and one who did not choose either. Yuko, you chose friends and parents. Can you tell us why?

Yuko Yasuda

Rather than my parents, I would seek the advice of my friends. Mainly because I think AI matchmaking would place greater emphasis on factors like educational background and income, and less emphasis on human traits that are hard to quantify. I myself don’t want to be judged by such quantifiable factors, and I don’t want to live in a society that believes those factors should be the basis for determining if someone is a good match. It’s not romantic at all. Our life can be more fulfilling, if we happen to meet somebody. I feel unexpected personal chemistry is more important. There’s no joy in it if my potential partner’s suitability is based on data.

Michael Sandel

Okay, data lacks surprise, and in romance surprise is important, not data.

Does anyone disagree with Yuko’s argument? What about you, Yasu? Is there anything you can say to change Yuko’s mind?

Yasuhiro Sasaki

Depending on how the data is gathered, I think it is possible for an AI application to evaluate a wide range of personality traits. Your friends may be able to introduce 100 potential candidates to you, but an AI allows you to choose from 100,000 or 1,000,000 potential candidates. Wouldn’t that be better? You could even program the AI to surprise you, and it might match you with someone you never imagined.

Michael Sandel

Wait a minute, Yasu. Does that mean that you program the algorithm to choose someone who does not seem a good match every 30 or 100 times? What does it mean to program ‘surprise’ into an algorithm?

Yasuhiro Sasaki

For example, Candidate A might seem to be the most compatible and the best match for you based on the life you have led so far. But there might be a Candidate B who - although they may not seem like a perfect match based on the life you have led so far - has interesting qualities and may take your life in a whole new direction. In other words, you can program your expectations of spontaneity into the AI individually. I think that probably can be done.

Michael Sandel

What do you say, Yuko?

Yuko Yasuda

If those kinds of multifaceted human characteristics can be entered in the program, then I admit its matchmaking accuracy would probably improve. But marriage involves a long-term commitment to a partner, and I wonder if the program can reasonably predict the future for the next 30 years based on past data. In that respect, I think it’s better to rely on intuition rather than data.

Yasuhiro Sasaki

Well, one out of three Japanese couples get divorced anyway, so intuition may not be the best guide either (laughter).

Michael Sandel

Yoshi, earlier you questioned whether job performance could be captured by a single metric. Do you think factors like “romance” and “surprise” can be incorporated into a matchmaking program?

Yoshikuni Takashige

I don’t think AI can measure what is working in the deepest place of the human mind. We’ve talked a lot about emotions today, and although AI technology can be useful in suggesting potential life partners, it cannot reach the core of the human soul.

Michael Sandel

Now let’s ask a slightly different question. Japan is experiencing a declining marriage rate and birth rate - and as Yasu mentioned, a rising divorce rate. What would you think of the idea of the government sponsoring a program to support AI-based matchmaking in hopes of increasing the marriage rate and possibly the birth rate? Would you be for it or against it?

By a narrow margin, the audience seems to be for it. But most of our panelists disagree. Mika, why are you against it?

Mika Takahashi

I really dislike the idea of an AI choosing my marriage partner. In the first place, I’m not sure how much an AI can really know me, or what kind of data it will use to choose a partner. I would trust the advice of a good friend more than an AI. I don’t want an AI to make decisions for me.

Michael Sandel

Edmund, you seem to be consistently in favor of using AI...

Edmund Cheong

I trust humanity, of course, but I still support the use of AI. Nowadays, people spend a lot of time immersed in a virtual world. Just looking around Tokyo Station, you can see that most people keep staring at their smartphones. All over the world, people are no longer interacting face-to-face.

Michael Sandel

Is that a good thing or a bad thing?

Edmund Cheong

It’s a bad thing, of course. I believe in people, but humanity as a whole seems to be moving from the physical world to a digital, virtual world. So if digital can help create romance, I’m for it.

Michael Sandel

Create romance digitally?

Edmund Cheong

You can’t fall in love digitally, but digital technology can create opportunities to fall in love.

Michael Sandel

Mika, do you want to reply?

Mika Takahashi

I don’t think love can be digitalized. I don’t think data can address all the issues involved, and I’m sure there are feelings, preferences, and choices that people make unconsciously with respect to the opposite sex that are too complex to digitalize.

Can virtual immortality ever be realized?

Michael Sandel

Edmund has made the bold claim that people are moving from a physical world into a virtual world, and we should therefore translate human experience and judgment in a way that takes the digital world into account.

The way we think, the way we feel, the reactions we have to people, to events, to our life circumstances, a lot of that is increasingly part of our digital existence. Now, suppose it was possible to aggregate every single email we send, every tweet we make, and every interaction, comment or opinion we express on social media, and that this aggregated data could be used to create digital avatars - digital twins - of people. These digital twins would live on even after the person’s death, preserving their personality, the way they thought and felt, their preferences and opinions. If we can create these digital twins, they can effectively achieve a kind of virtual immortality.

After a person’s death, surviving friends and family would be able to converse with these digital twins. They would be able to ask them their opinions not just about past events, but even about things happening in the present. Would you want to communicate with such a digital twin? Would you want to converse with the digital twin of your loved one? Or do you find the idea so creepy that you wouldn’t want to do that?

About one-third of the audience would like to have such a conversation, and two-thirds would not. On our panel, all but two say they would not. Sebastian, you answered that you would like to have such a conversation. Who would you like to talk to?

Sebastian Mathews

My ancestors. I would like to speak to the ones I have never met. But at the same time I would be afraid to see their consciousness trapped inside a digital prison. I would feel sorry for them.

Michael Sandel

Mika, you wouldn’t want to have such a conversation...why not?

Mika Takahashi

It might be interesting to talk with an ancestor I’ve never met. But I think a key difference between the living and the dead is that the living are constantly changing. They live in an environment that changes from moment to moment, so their opinions and thinking are always in flux. But the digital twin remains completely unchanged from the point at which the person died.

Michael Sandel

Wait, I wonder if that is true. With machine learning, the digital twin could continue to learn and address new situations. So it would be possible that the digital twin could infer from the opinions they had on past affairs and react to a new situation in the family. If that were possible, would you want to speak to it?

Mika Takahashi

But there is a randomness to human behavior that enables people to suddenly start doing something different that cannot be predicted from their past behavior. So even if the digital twin learns from both past and present data, it cannot behave like a living person. It is not the same as the person who died, but something else.

Michael Sandel

Yumiko, what do you think? In most of the scenarios we’ve discussed today, you voted in favor of AI. Why did you vote against it this time?

Yumiko Kajiwara

I believe we have to accept death unflinchingly. It must be accepted as a fact. Only then can we move forward and think about what to do.

But when I talked with people working for a Japanese company, they said they wished they could ask their company founder how he would navigate the chaotic age we currently live in. If there were a digital twin of the founder, he might be able to advise them. I have heard similar ideas from people at other companies as well.

Although I want to accept the death of my own relatives as a fact, I am interested in the possibility of talking with the deceased leader in the context I just mentioned.

Michael Sandel

Hazumu, what about you?

Hazumu Yamazaki

Well, in Japan we have spirit mediums called itako who are said to be capable of communicating with the dead (laughter). But because digital twins would not actually be conscious beings, their responses in any conversation would be essentially automatic. I wouldn’t really be able to empathize with them, and would probably feel a sense of emptiness. To me, it’s more important to care for the living, so I personally wouldn’t be interested in talking to a digital twin. However, as for hearing the opinions of a long-deceased founder, I think it would be acceptable if it was made clear that those opinions were generated by an AI. Because in that case there would be no emotional investment, and no need to establish an empathic human relationship.

Michael Sandel

At the start of our discussion today, some people said that AI had no subjectivity or emotion, so its judgments are objective and fair, but later on, more and more people said that emotion, affection, and consciousness are important values for human beings.

Yoshi, we’ve talked extensively about AI, and we’ve discussed human love, life, and death. I think it’s time to wrap things up. What do you say?

Trust and corporate social responsibility

Yoshikuni Takashige

We had a lot of discussions today, and as we sought answers to seemingly simple questions, the discussions deepened in surprising ways. There were many different opinions expressed, and no simple solutions. It is clear that the issues raised need to be discussed, not superficially, but openly and in great depth. I believe the most important thing is the ‘invisible value’ that we don’t usually notice. In order to discover it, we have to dive deep into our minds. That is what business leaders must address in this era of digital transformation. In fact, through today’s discussions, we’ve come to realize there is a potential that even love and the thinking of a person can be digitized.

One thing I would like to ask Professor Sandel is: when collaboration between humans and AIs becomes commonplace, and AIs can make decisions about a wide range of things, what areas should humans keep as our most important and exclusive domains? What are the guiding principles?

Michael Sandel

Well, I think some of the guiding principles emerged from today’s discussions. One guiding principle is that for any use of digital technology to substitute for human judgement or supplement human judgement, whether we are talking about employee evaluations, medical diagnosis, or matchmaking, we need some sort of pattern or metric to guide the algorithms we employ. And this raises a big philosophical question, because there are certain kinds of human judgement that cannot be measured by such patterns or metrics. Our panelists today mentioned romance, emotions, affection, and respecting the boundary between life and death as examples.

But the boundary of practices which cannot be measured by patterns or metrics, and which necessarily involve human considerations, consciousness, affection or romance, is not really so clear. What about employee performance evaluations, for example? There were differences of opinion on this subject in the audience and among our panelists. That is why this type of debate is so valuable. I don’t think we should approach this as if we can find one principle that we can apply to all of these questions. Because all these debates are about very complex human issues - making a medical diagnosis, choosing a life partner, and communicating with a loved one. These debates will continue, and what matters is that we address these questions deliberately, and not expect that technology can decide them for us.

Yoshikuni Takashige

At Fujitsu, we have a human-centric philosophy that puts people at the center of everything. Every decision must be made according to this principle. Today’s discussions have reminded me that we need to expand and deepen this human-centric philosophy, so that it fully encompasses emotions and other invisible human values that we have to use for judgements.

Well, we’re running out of time, but before we close, I would like to ask a question that is particularly relevant to the business leaders here in our audience today. In these chaotic times, what kind of practical leadership is needed to ensure sound, ethically responsible decision-making?

Michael Sandel

First of all, I think business leaders need to engage with society and proactively debate the ethical questions, because they involve fundamental human values, not just numbers and metrics. Secondly, I think it is important for business leaders to recognize that everyone, including themselves, is a citizen, and a human being with human reactions of his or her own, as you have seen in the voting. Lastly, the discussion about AI and related technologies may be seen as a question of technology. But actually, the more deeply we investigate these dilemmas of technology, the more we find that these are really debates about us, about what it means to be a human being. Therefore, the challenge of new digital technology provides an opportunity for us to reflect more deeply on what it is to be human.

Yoshikuni Takashige

Businesses involve corporate managers, employees, customers, consumers, and ecosystem partners, but they are all human beings and members of the same community. And if we can approach business from such a human-centric point of view, we will be able to make the right management decisions. Is that right?

Michael Sandel

I would say business in this digital age raises these big questions about technology. And it is not possible to take an ethical stand without engaging in these rather deep questions.

Yoshikuni Takashige

It comes down to a question of trust between people, and between people and companies. If we can establish a strong bond of trust among various stakeholders, that is a truly meaningful achievement.

Michael Sandel

Yes. But it is important to remember that building trust does not mean agreeing on everything. In fact, if anything, it is the opposite. It means you are willing to listen to opinions that disagree with your own - as the members of our panel did with one another - and respect those opinions and think them through. Trust in others and respect for disagreement about values are much more important than trying to get everybody to agree.

Yoshikuni Takashige

Thank you very much. One last question...can you tell us what role and responsibility you think a technology company like Fujitsu should play? What are your expectations for Fujitsu in this digital era?

Michael Sandel

My answer is very short. The biggest responsibility of a leading technology company in this digital age is to encourage and promote debate and discussion on big ethical questions, not just within the company, but also throughout society as a whole.

Yoshikuni Takashige

Professor Sandel, thank you very much.

As the interactive discussion about trust in the digital age drew to a close, the packed hall erupted in applause.