Kawasaki, Japan, April 02, 2015

Fujitsu Laboratories Ltd. today announced the development of software that monitors communications packets and analyzes quality in real time at a world-record speed of 200 Gbps.

As cloud services advance, driven by the spread of smartphones and tablets and the expanded use of datacenters, it is increasingly important to have a firm grasp of service quality in order to keep systems operating stably as a whole. Until now, however, monitoring communications packets on commodity hardware could not support high-speed service-quality analysis, because of limits on CPU performance and memory access.

Now Fujitsu has developed software that runs on a single piece of commodity hardware to monitor communications packets and analyze network and application quality in real time at 200 Gbps, a ten-fold improvement in performance. This was achieved with three technologies: one that reduces the computational load of packet collection, one that eliminates memory copying and mutual exclusion, and a parallel-processing technique that prevents contention when multiple CPU cores are used.

Together, these technologies make it possible to provide network infrastructure that supports the stable use of services without expensive, specialized equipment, which lowers costs.

Background

As cloud services advance with the spread of smartphones and tablets and the expanded use of datacenters, the quality of services delivered over communications networks is becoming increasingly important. In practice, however, service quality can degrade unpredictably as the volume of data on networks grows and system architectures become more complex. Detecting and recovering from such degradation quickly requires real-time analysis to determine whether the cause lies in network quality or at the application level, for example in response times. Analyzing network and application quality, in turn, requires a detailed look at the behavior of each communications packet.

Technological Issues

Network communications speeds increase every year. One hundred Gbps Ethernet, which carries 200 Gbps when upstream and downstream traffic are combined, is already deployed on carrier backbone networks, and datacenter systems are being built with multiple 10 Gbps ports, each carrying 20 Gbps when both directions are combined. A faster network transmits more packets per second, which demands more processing power.
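To put "more packets" in numbers, here is a back-of-the-envelope calculation that is not part of the announcement. It assumes worst-case traffic made entirely of minimum-size 64-byte Ethernet frames, each occupying 20 further bytes on the wire (8 bytes of preamble and start-of-frame delimiter plus a 12-byte inter-frame gap). Real traffic has larger average packets and therefore lower packet rates.

```python
# Worst-case packet rate at a given Ethernet line rate.
# Assumption: every frame is the 64-byte minimum, and each frame also occupies
# 20 extra bytes on the wire (8-byte preamble + SFD, 12-byte inter-frame gap).
MIN_FRAME_BYTES = 64
WIRE_OVERHEAD_BYTES = 20


def max_packets_per_second(line_rate_bps: float) -> float:
    """Return the maximum frames per second for minimum-size frames."""
    bits_per_frame = (MIN_FRAME_BYTES + WIRE_OVERHEAD_BYTES) * 8  # 672 bits
    return line_rate_bps / bits_per_frame


per_direction = max_packets_per_second(100e9)  # one direction of 100 GbE
both_directions = 2 * per_direction            # the "200 Gbps" figure
budget_ns = 1e9 / both_directions              # time per packet on one core

print(f"{per_direction / 1e6:.1f} Mpps per direction")  # 148.8 Mpps
print(f"{both_directions / 1e6:.1f} Mpps combined")     # 297.6 Mpps
print(f"{budget_ns:.2f} ns per packet on one core")     # 3.36 ns
```

At these rates a single core would have about 3.4 ns per packet, roughly 10 clock cycles at an assumed 3 GHz, which is why batching work and spreading it across cores matters.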

However, the maximum number of packets that can be received and transmitted per unit of time is limited by the CPU and other hardware components. In addition, packet collection and network- and application-quality analysis depend on memory-access performance and are slowed by copying memory between processes. These problems have made it difficult to improve processing performance.

About the Technology

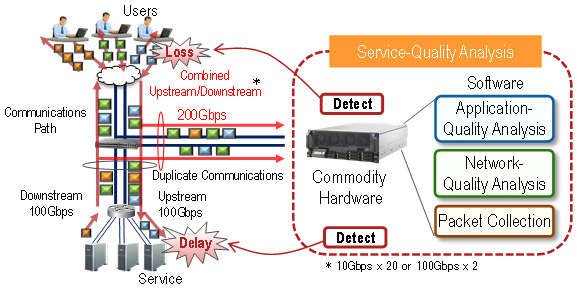

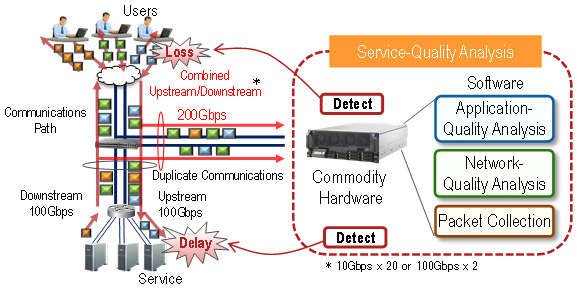

Fujitsu has developed technology that collects packets at 200 Gbps and performs network- and application-quality analysis entirely in software, eliminating the need for expensive, specialized hardware (Figure 1). For network-quality analysis, the software calculates the traffic volume on the network and detects packet loss and network latency. For application-quality analysis, it calculates the communications volume of each service and detects the response delay of each application that provides a service. Combining these analyses makes it possible to identify the sources of service delays and where they occur.

Figure 1: Diagram of 200 Gbps service-quality analysis
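The announcement does not say how each metric is computed. As a rough sketch of the kinds of calculations involved, the Python code below assumes a hypothetical, already-decoded `Packet` record carrying a per-flow counter that increases by one per packet and an optional request identifier. From these it derives total traffic volume, per-service volume, packet loss (gaps in the per-flow counter), and per-service response delay (time from a request to its matching response). Network latency measurement and packet reordering are not covered.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Packet:
    # Hypothetical, simplified packet record; a real monitor would decode
    # these fields from captured protocol headers.
    ts: float                         # capture timestamp in seconds
    flow: str                         # flow identifier, e.g. a 5-tuple as text
    seq: int                          # per-flow counter, assumed to step by 1
    size: int                         # bytes on the wire
    service: str                      # service the flow belongs to
    request_id: Optional[int] = None  # set on application requests/responses
    is_response: bool = False


def analyze(packets):
    total_bytes = 0
    bytes_per_service = defaultdict(int)
    lost = defaultdict(int)           # flow -> number of missing packets
    last_seq = {}                     # flow -> highest counter seen so far
    pending = {}                      # (service, request_id) -> request time
    delays = defaultdict(list)        # service -> response delays in seconds
    for p in packets:
        # Traffic volume, overall and per service.
        total_bytes += p.size
        bytes_per_service[p.service] += p.size
        # Network quality: a jump in the per-flow counter means lost packets.
        prev = last_seq.get(p.flow)
        if prev is not None and p.seq > prev + 1:
            lost[p.flow] += p.seq - prev - 1
        if prev is None or p.seq > prev:
            last_seq[p.flow] = p.seq
        # Application quality: pair each response with its request.
        if p.request_id is not None:
            key = (p.service, p.request_id)
            if not p.is_response:
                pending[key] = p.ts
            elif key in pending:
                delays[p.service].append(p.ts - pending.pop(key))
    return total_bytes, dict(bytes_per_service), dict(lost), dict(delays)


pkts = [
    Packet(0.000, "c->s", 1, 100, "web", request_id=7),
    Packet(0.001, "c->s", 2, 1500, "web"),
    Packet(0.002, "c->s", 4, 1500, "web"),  # counter 3 was never seen
    Packet(0.050, "s->c", 1, 800, "web", request_id=7, is_response=True),
]
total, per_service, lost, delays = analyze(pkts)
print(total, per_service, lost, delays)
```

Combining the two views is what localizes a problem: loss on a flow points at the network, while a long response delay with no loss points at the application.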


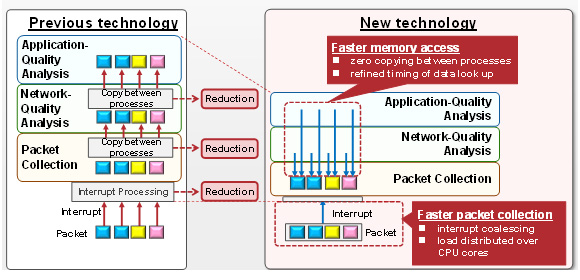

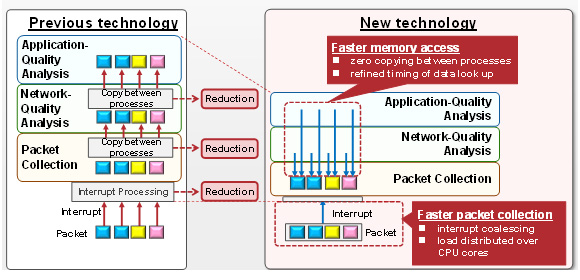

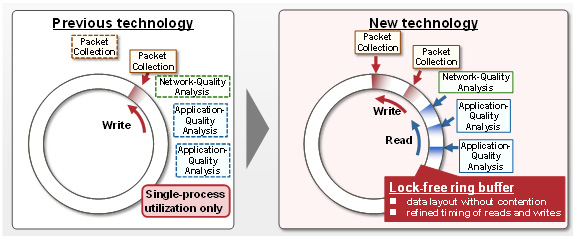

Features of the technology are as follows (see Figures 2 and 3):

- Faster packet collection

Fujitsu improved the performance of packet-collection processing in two ways. First, it coalesces the interrupts that are generated as each packet arrives, which reduces the number of processing iterations. Second, because a CPU core halts its other processing whenever it accepts a new interrupt, it distributes interrupt processing over multiple CPU cores.

- Faster memory access

Fujitsu refined how and when the packet-collection and quality-analysis processes look up shared data. Data can now be looked up in place, without copying packets or analysis data, and the design avoids writing to regions of memory that are still in the middle of a write or lookup operation. This eliminates the need for both memory copying and mutual exclusion.

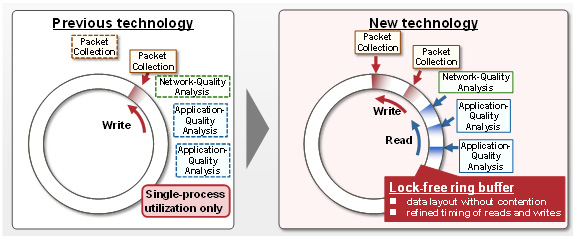

- Parallel processing

Because mutual exclusion is unnecessary, multiple analysis processes running on different CPU cores can access the same ring buffer without contention, so analysis performance scales linearly with the number of CPU cores.
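The announcement does not describe Fujitsu's coalescing or distribution mechanism in detail. The Python simulation below is only a sketch of the general idea. Its parameters `max_frames` and `max_wait_ns` are hypothetical, modeled loosely on the frame-count and timeout thresholds that common NIC drivers expose (on Linux, `ethtool -C` with `rx-frames` and `rx-usecs`). It counts how many interrupts a stream of arrivals would raise, and assigns flows to cores by hashing a flow identifier, similar in spirit to receive-side scaling (RSS).

```python
import zlib
from collections import Counter


def count_interrupts(arrival_ns, max_frames=1, max_wait_ns=0):
    """Count receive interrupts for packets arriving at the given times (ns).

    Hypothetical model: an interrupt fires as soon as `max_frames` packets
    are pending, or once `max_wait_ns` has elapsed since the oldest pending
    packet arrived. max_frames=1 means one interrupt per packet.
    """
    interrupts = 0
    pending = 0
    first_ts = 0
    for t in arrival_ns:
        # The coalescing timer expired before this packet arrived.
        if pending and t >= first_ts + max_wait_ns:
            interrupts += 1
            pending = 0
        if pending == 0:
            first_ts = t          # this packet starts a new batch
        pending += 1
        if pending >= max_frames:
            interrupts += 1       # batch full: interrupt immediately
            pending = 0
    if pending:
        interrupts += 1           # the timer eventually fires for leftovers
    return interrupts


def assign_core(flow_id: str, n_cores: int) -> int:
    """Map a flow to a core by hashing its identifier (RSS-like)."""
    return zlib.crc32(flow_id.encode()) % n_cores


# 1,000 packets arriving every 100 ns (about 10 Mpps).
arrivals = list(range(0, 100_000, 100))
print(count_interrupts(arrivals))                                     # 1000
print(count_interrupts(arrivals, max_frames=64, max_wait_ns=50_000))  # 16

# Spread 64 hypothetical flows over 4 cores.
flows = [f"10.0.0.{i}:{1000 + i}" for i in range(64)]
per_core = Counter(assign_core(f, 4) for f in flows)
print(per_core)
```

Keeping every packet of a flow on one core avoids sharing per-flow state between cores, and the timeout bounds how long a packet can wait when traffic is sparse.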

Figure 2: Technologies for faster packet collection, faster memory access
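The announcement does not publish the data structure behind the copy-free, lock-free lookup. A common way to let one writer and one reader share packet buffers without copying and without locks is a single-producer, single-consumer ring in which each index has exactly one writer, and the Python sketch below illustrates that ownership discipline under this assumption. The class `SpscRing` and its methods are hypothetical. Python's GIL hides memory-ordering issues; a real implementation in C would use atomic loads and stores with acquire/release ordering on `head` and `tail`.

```python
class SpscRing:
    """Single-producer / single-consumer ring of preallocated packet slots.

    Only the producer writes `head`; only the consumer writes `tail`.
    Because each index has exactly one writer, no lock is needed.
    """

    def __init__(self, n_slots: int, slot_size: int):
        self.n = n_slots
        self.slot_size = slot_size
        self.slots = [bytearray(slot_size) for _ in range(n_slots)]
        self.lengths = [0] * n_slots
        self.head = 0  # next slot to fill (written only by the producer)
        self.tail = 0  # next slot to read (written only by the consumer)

    # Producer side (packet collection).
    def try_put(self, packet: bytes) -> bool:
        if len(packet) > self.slot_size:
            raise ValueError("packet larger than slot")
        if self.head - self.tail == self.n:
            return False  # full: never overwrite a slot still being read
        i = self.head % self.n
        self.slots[i][: len(packet)] = packet  # same-size write, no resize
        self.lengths[i] = len(packet)
        self.head += 1  # publish only after the slot is fully written
        return True

    # Consumer side (quality analysis).
    def peek(self):
        if self.tail == self.head:
            return None  # empty: never read a slot still being written
        i = self.tail % self.n
        return memoryview(self.slots[i])[: self.lengths[i]]  # a view, not a copy

    def release(self):
        self.tail += 1  # hand the slot back to the producer


ring = SpscRing(n_slots=2, slot_size=16)
ring.try_put(b"abc")
ring.try_put(b"de")
view = ring.peek()
print(bytes(view))  # b'abc'
ring.release()
```

The consumer analyzes the packet through a `memoryview` over the producer's buffer, so nothing is copied between the two sides, and the full/empty checks are what prevent either side from touching a slot the other is still using.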


Figure 3: Parallel processing technology that enables effective use of multiple cores
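One way several analysis processes could share a single ring without locks, assumed here for illustration since the announcement gives no details, is for each reader to keep a private cursor that only it writes, while the producer merely reads all cursors to decide when a slot may be reused. In this hypothetical sketch each reader also handles only the flows whose hash maps to it, so per-flow statistics stay private to one reader and no analysis state is shared. Because of Python's GIL this code does not demonstrate real parallel speedup; it only shows the single-writer-per-variable pattern that avoids contention.

```python
import zlib


class MultiReaderRing:
    """One producer and several readers sharing one ring without locks.

    The producer is the only writer of `head`; reader k is the only writer
    of `cursors[k]`. A slot can be reused once every reader has passed it.
    """

    def __init__(self, n_slots: int, n_readers: int):
        self.n = n_slots
        self.slots = [None] * n_slots
        self.head = 0
        self.cursors = [0] * n_readers

    def try_put(self, item) -> bool:
        if self.head - min(self.cursors) == self.n:
            return False  # the slowest reader still needs the oldest slot
        self.slots[self.head % self.n] = item
        self.head += 1
        return True

    def poll(self, k: int):
        """Reader k: yield its share of packets, advancing only its cursor."""
        n_readers = len(self.cursors)
        while self.cursors[k] < self.head:
            flow, size = self.slots[self.cursors[k] % self.n]
            if zlib.crc32(flow.encode()) % n_readers == k:
                yield flow, size  # slot stays reserved while being analyzed
            self.cursors[k] += 1


ring = MultiReaderRing(n_slots=8, n_readers=2)
for i in range(8):
    ring.try_put(("abcd"[i % 4], 100))  # 4 hypothetical flows, 100 bytes each
full_before_reading = not ring.try_put(("a", 100))

# Each reader keeps its own per-flow byte counts; nothing is shared.
per_reader = [{} for _ in range(2)]
for k in range(2):
    for flow, size in ring.poll(k):
        per_reader[k][flow] = per_reader[k].get(flow, 0) + size
print(per_reader)
```

Since readers never write shared variables, adding readers adds no coordination cost, which is the property that would let analysis throughput grow with the number of cores.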


Results

This technology boosts performance ten-fold, making it possible to monitor packets flowing over high-speed 200 Gbps networks and to analyze network and application quality in real time using only software and commodity hardware, rather than expensive, specialized hardware. As a result, the causes and locations of service-quality degradation can be identified inexpensively.

In addition, because sender information is kept in logs, the technology can be used to trace the sources of cyberattacks and identify the servers under attack. And because bandwidth requirements can be tracked per application, infrastructure can be expanded as needed.
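As an illustration of how logged sender information and per-application traffic could be summarized, here is a sketch that assumes a hypothetical `FlowLog` record with sender, destination, application, and byte count; the announcement does not specify a log format. Ranking senders and destinations by volume is only a crude heuristic for spotting attack sources and targeted servers, not an attack detector.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class FlowLog(NamedTuple):
    # Hypothetical log record; the source only says sender info is logged.
    src: str     # sender address
    dst: str     # destination server
    app: str     # application / service name
    nbytes: int  # bytes observed during the logging interval


def summarize(logs: Iterable[FlowLog], interval_s: float, top: int = 3):
    """Rank senders and destinations by volume; compute per-app bandwidth."""
    by_src, by_dst, by_app = Counter(), Counter(), Counter()
    for rec in logs:
        by_src[rec.src] += rec.nbytes
        by_dst[rec.dst] += rec.nbytes
        by_app[rec.app] += rec.nbytes
    # Convert bytes per interval into megabits per second for each app.
    app_mbps = {app: b * 8 / interval_s / 1e6 for app, b in by_app.items()}
    return by_src.most_common(top), by_dst.most_common(top), app_mbps


logs = [
    FlowLog("10.0.0.9", "srv1", "web", 9_000_000),
    FlowLog("10.0.0.2", "srv1", "web", 1_000_000),
    FlowLog("10.0.0.3", "srv2", "db", 500_000),
]
top_src, top_dst, app_mbps = summarize(logs, interval_s=1.0)
print(top_src[0], top_dst[0], app_mbps)
```

Tracked over time, the per-application bandwidth figures are the kind of input that capacity planning for each service would need.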

Taken together, these capabilities can provide network infrastructure that supports the stable use of services, enabling faster troubleshooting, stronger security, and faster service provisioning.

Future Plans

Fujitsu is continuing R&D and verification testing of the quality analyses, with the goal of incorporating this technology into a commercial product during fiscal 2015.

E-mail: 200g-analyze@ml.labs.fujitsu.com
