Kawasaki, Japan, October 09, 2013

Fujitsu Laboratories Ltd. has announced the development of the world's first 3D image synthesis technology for advanced driver-assistance systems. The new technology displays people and objects close to the vehicle that pose a collision risk without distortion and with an accuracy of within two centimeters.

In previous commercially available products that improve a driver's field of vision, images from multiple onboard cameras are joined together into an overhead view by image processing, but distortions in this view make nearby people, vehicles, and other objects difficult to recognize. Fujitsu Laboratories has augmented the cameras with range information from wide-angle laser radars to correct the distortion, presenting the driver with a view of the surroundings that is much easier to recognize and that shows collision risks visually, day or night. This technology will increase safety and reassure the driver when parking or passing on narrow roads.

This technology will be exhibited at Fujitsu's booth at ITS World Congress Tokyo 2013, opening October 15 at Tokyo Big Sight.

Background

As exemplified by the Kids Transportation Safety Act(1) in the United States, the importance of vehicle camera systems is recognized worldwide. Commercially available systems give drivers a better view when pulling into or out of parking spaces, using either a rear-facing camera or multiple cameras mounted around the vehicle whose images are combined by image processing into a synthetic overhead view. Combined with ultrasonic sensors and other obstacle-detection devices, today's systems can detect nearby obstacles and alert the driver audibly and visually.
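Ultrasonic parking sensors of the kind mentioned above generally measure distance by timing an echo. The sketch below illustrates that principle only; it assumes a speed of sound of about 343 m/s (dry air near 20 °C) and a hypothetical 0.5 m alarm threshold, and the function names are illustrative rather than taken from any product.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate value for dry air at about 20 °C


def echo_distance_m(round_trip_s: float) -> float:
    """Distance to the reflecting obstacle; the pulse travels out and back, hence the halving."""
    return SPEED_OF_SOUND_M_PER_S * round_trip_s / 2.0


def should_alert(round_trip_s: float, threshold_m: float = 0.5) -> bool:
    """Hypothetical alarm rule: warn when the echo comes from inside the threshold distance."""
    return echo_distance_m(round_trip_s) < threshold_m


if __name__ == "__main__":
    for t in (0.002, 0.004, 0.010):
        print(f"echo after {t * 1000:.0f} ms -> {echo_distance_m(t):.3f} m, alert={should_alert(t)}")
```

A single echo yields a range but no direction, which is one reason such sensors can localize an obstacle only coarsely.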

Issues

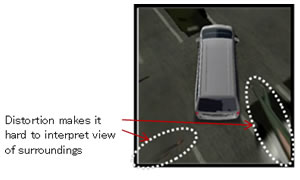

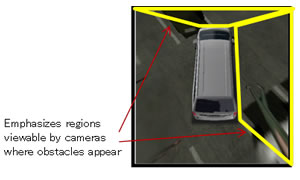

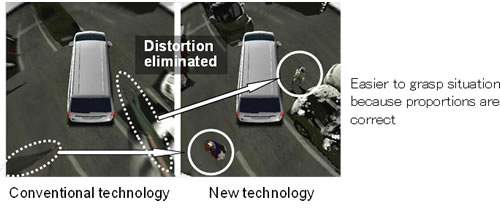

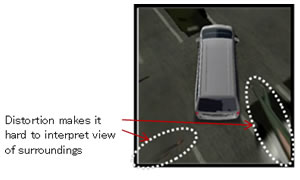

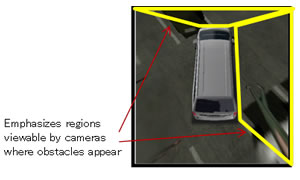

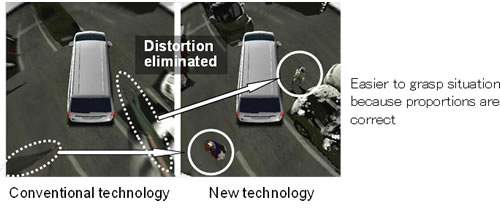

In existing multi-camera systems, distortions in the synthetic image can make nearby people and objects, such as pedestrians or parked vehicles, difficult for the driver to recognize. As a result, it is hard for the driver to grasp the surroundings intuitively or to judge the distance to objects (Figure 1). Even when these systems are combined with sonar (ultrasonic) sensors, the sensors' poor spatial resolution means the driver sees only a rough indication of the danger zone within an already distorted image. This makes it difficult for the driver to understand the situation instantly when an object enters the sensor field and triggers an alarm (Figure 2).
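The release does not spell out the geometry, but a common cause of this kind of distortion in overhead views is that each camera image is projected onto a flat ground plane. A minimal sketch, assuming an ideal pinhole camera and a flat road: a point at height h and horizontal distance d from a camera mounted at height H is drawn where the camera ray through it meets the ground, at d·H/(H − h), so upright objects are stretched away from the vehicle. The camera height and point heights below are hypothetical.

```python
def ground_projection_distance(d: float, h: float, cam_height: float) -> float:
    """Where a flat-ground overhead view draws a point at horizontal distance d and height h.

    The camera ray through the point is extended until it meets the ground plane (height 0).
    Only valid for points between the ground and the camera height.
    """
    if not 0.0 <= h < cam_height:
        raise ValueError("point must lie between the ground and the camera height")
    return d * cam_height / (cam_height - h)


if __name__ == "__main__":
    H = 1.0  # hypothetical camera mounting height in meters
    d = 2.0  # pedestrian standing 2 m away (horizontal distance)
    for h in (0.0, 0.5, 0.9):  # illustrative points on the pedestrian, from the feet upward
        print(f"height {h:.1f} m -> drawn at {ground_projection_distance(d, h, H):.1f} m")
```

Only points actually on the ground (h = 0) land in the right place; everything above it is smeared outward, which is why range information about real 3D shapes helps.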

Figure 1: Conventional composite images

Figure 2: Conventional sonar-based obstacle detection view

About the Technology

To solve these problems, Fujitsu Laboratories has developed a system that includes four onboard cameras facing front, rear, left, and right, as well as 3D laser radars that produce high-resolution range information covering an extremely wide angle. The result is the world's first 3D image synthesis technology that overcomes image distortion and clearly shows where the risks of collision are. Some of the technology's key features are as follows.

1. A 3D virtual projection and point-of-view conversion technology using multiple laser radars and cameras

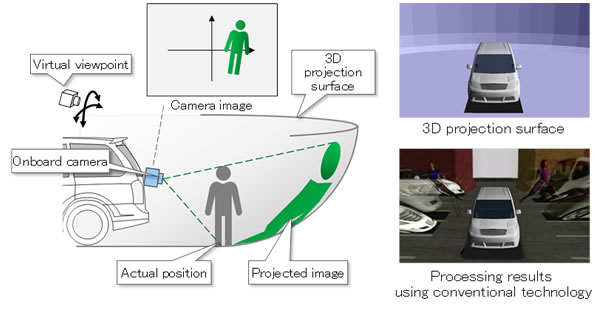

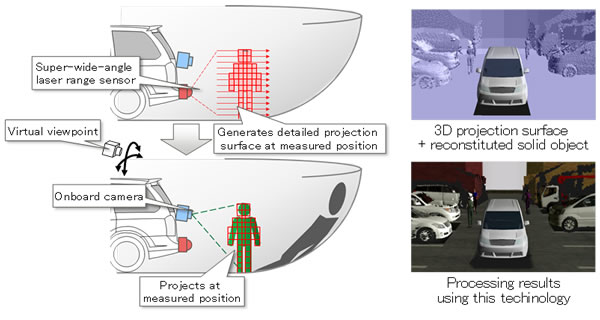

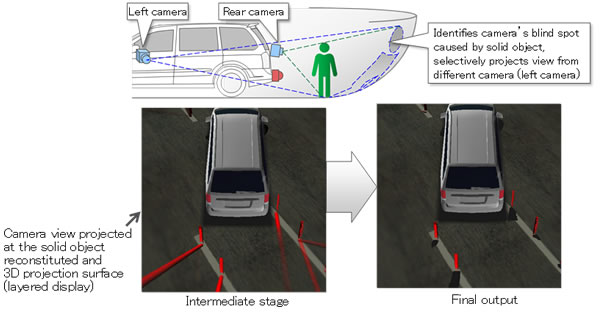

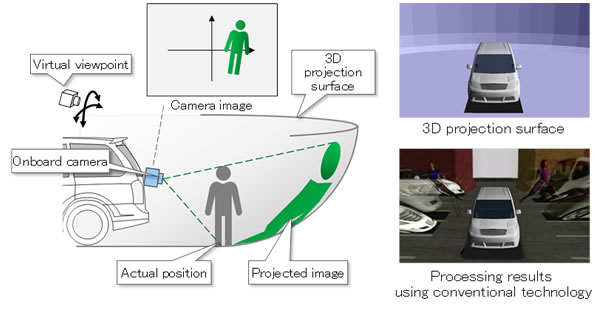

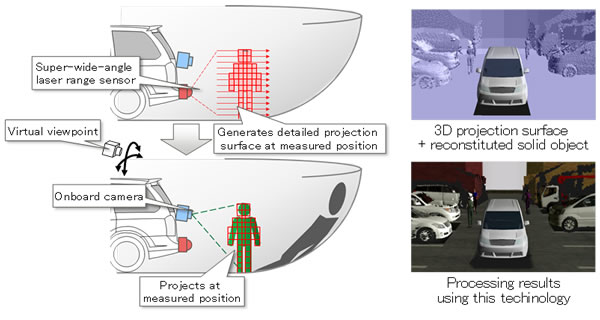

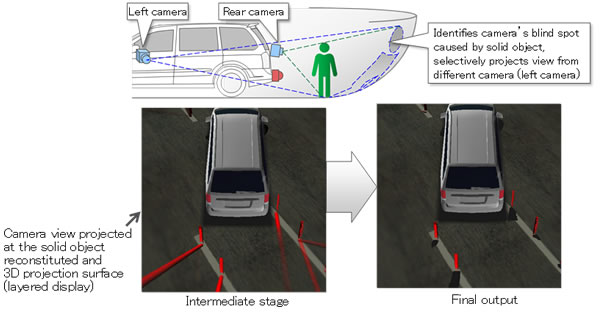

Building on Fujitsu's existing wraparound-view monitor technology, the new technology places a virtual 3D projection surface around the vehicle and then uses range information from the laser radars to generate detailed projection surfaces for 3D objects. Images from the cameras are projected onto this 3D surface, producing a synthesized model that combines range and image information (Figure 3). Because the system accounts for the exact position and angle of each camera and laser radar, it can determine which parts of surrounding objects fall in each camera's blind spot and selectively project views from other cameras to fill them in. The result is a more natural synthetic image than a single laser radar and camera pair could achieve (Figure 4).
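The release does not publish the algorithm, but the blind-spot handling it describes resembles a standard visibility test. The sketch below assumes ideal pinhole cameras with known poses (the calibration referred to above), 3D points already measured by the laser radars in a common frame, and a per-camera depth buffer for occlusion. `PinholeCamera`, `assign_cameras`, `example_rig`, the depth tolerance, and the preference for the nearest camera are all hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Pixel = Tuple[int, int]


@dataclass
class PinholeCamera:
    """Hypothetical ideal pinhole camera: p_cam = R @ p_world + t, z_cam pointing forward."""
    R: Sequence[Sequence[float]]  # 3x3 world-to-camera rotation
    t: Vec3                       # world-to-camera translation
    f: float                      # focal length in pixels
    cx: float                     # principal point (pixels)
    cy: float
    width: int
    height: int

    def project(self, p: Vec3) -> Optional[Tuple[int, int, float]]:
        """Return (u, v, depth) for a world point, or None if behind the camera or off-image."""
        x, y, z = (sum(self.R[i][j] * p[j] for j in range(3)) + self.t[i] for i in range(3))
        if z <= 0.0:
            return None
        u = math.floor(self.f * x / z + self.cx)
        v = math.floor(self.f * y / z + self.cy)
        if not (0 <= u < self.width and 0 <= v < self.height):
            return None
        return u, v, z


def assign_cameras(points: List[Vec3], cameras: List[PinholeCamera],
                   depth_tol: float = 0.05) -> List[Optional[int]]:
    """For each range-sensor point, pick the index of a camera that actually sees it, or None."""
    # Pass 1: per-camera z-buffer holding the nearest depth observed at each pixel.
    zbuf: List[Dict[Pixel, float]] = [{} for _ in cameras]
    projections = []
    for p in points:
        row = [cam.project(p) for cam in cameras]
        for k, proj in enumerate(row):
            if proj is not None:
                u, v, z = proj
                zbuf[k][(u, v)] = min(z, zbuf[k].get((u, v), math.inf))
        projections.append(row)
    # Pass 2: a point is visible to a camera only if it is (nearly) the nearest surface at its
    # pixel; among the cameras that see it, prefer the closest one (finer image detail).
    result: List[Optional[int]] = []
    for row in projections:
        best, best_depth = None, math.inf
        for k, proj in enumerate(row):
            if proj is None:
                continue
            u, v, z = proj
            if z <= zbuf[k][(u, v)] + depth_tol and z < best_depth:
                best, best_depth = k, z
        result.append(best)
    return result


def example_rig() -> List[PinholeCamera]:
    """Hypothetical two-camera rig (Z up): camera 0 at the origin looking along +X,
    camera 1 at X = 10 m looking back along -X."""
    front = PinholeCamera(R=[[0, -1, 0], [0, 0, -1], [1, 0, 0]], t=(0.0, 0.0, 0.0),
                          f=100.0, cx=160.0, cy=120.0, width=320, height=240)
    back = PinholeCamera(R=[[0, 1, 0], [0, 0, -1], [-1, 0, 0]], t=(0.0, 0.0, 10.0),
                         f=100.0, cx=160.0, cy=120.0, width=320, height=240)
    return [front, back]


if __name__ == "__main__":
    # A near point, a point hidden behind it (as seen from camera 0), and a point high overhead.
    pts = [(3.0, 0.0, 0.0), (6.0, 0.0, 0.0), (5.0, 0.0, 10.0)]
    print(assign_cameras(pts, example_rig()))
```

In the example, the second point is occluded for camera 0 by the first, so its texture comes from camera 1, and the overhead point is seen by neither camera. A production system would presumably run an equivalent test per pixel on the GPU rather than per point in Python.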

Figure 3a: Principle behind conventional technology (surrounding object monitor technology)

Figure 3b: Principle behind new technology

Figure 4: Compensating for camera blind-spots caused by solid objects

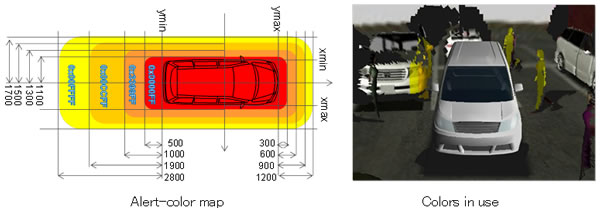

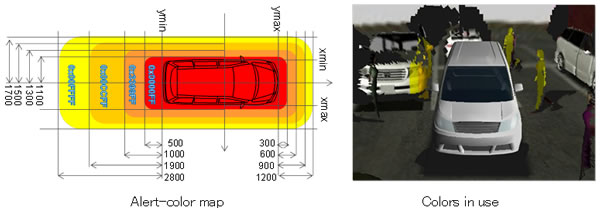

2. Transparent superimposition technology for collision risk detection

Using range information from the 3D laser radars, which have high spatial resolution and work equally well in light or dark conditions, the system superimposes a transparent color layer on nearby objects, with the color indicating how high each object's collision risk is. When rendering the reconstructed 3D objects, the system takes into account vehicle speed, turning angle, and other vehicle factors, and uses an alert color map based on distance, direction of travel, and orientation to indicate collision risk (Figure 5). The laser radars' precise range measurement, accurate to approximately 2 cm, lets the system identify people or objects at risk of collision.
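The release does not give the risk model itself. The sketch below is one plausible way to combine the listed factors, under stated assumptions: the turning angle is treated as a steering angle feeding a kinematic bicycle model that predicts the vehicle's path, risk comes from the distance along that path and the time to reach the object at the current speed, and object orientation is ignored. The vehicle dimensions, thresholds, colors, and the names `path_coordinates`, `risk_level`, `blend`, and `RISK_COLORS` are hypothetical. The last function shows the transparent superimposition as ordinary alpha blending.

```python
import math
from typing import Dict, Tuple

RGB = Tuple[int, int, int]

# Hypothetical alert color map: high / medium / low collision risk.
RISK_COLORS: Dict[str, RGB] = {"high": (255, 0, 0), "medium": (255, 200, 0), "low": (0, 200, 0)}


def path_coordinates(x: float, y: float, steer_rad: float, wheelbase: float) -> Tuple[float, float]:
    """Return (distance along the predicted path, lateral offset from it) for an object at (x, y).

    Vehicle frame: origin at the rear axle, x forward, y to the left. The predicted path is
    the kinematic bicycle-model arc of radius wheelbase / tan(steer_rad).
    """
    if abs(steer_rad) < 1e-6:  # effectively driving straight ahead
        return x, abs(y)
    radius = wheelbase / math.tan(steer_rad)
    if radius < 0:  # right turn: mirror into the equivalent left turn
        radius, y = -radius, -y
    # The turn center is at (0, radius); the vehicle starts at angle -pi/2 around it.
    phi = math.atan2(y - radius, x)
    swept = (phi + math.pi / 2) % (2 * math.pi)
    return radius * swept, abs(math.hypot(x, y - radius) - radius)


def risk_level(x: float, y: float, speed_mps: float, steer_rad: float,
               wheelbase: float = 2.7, half_width: float = 0.9, margin: float = 0.3) -> str:
    """Classify an object's collision risk (all dimensions and thresholds are hypothetical)."""
    along, lateral = path_coordinates(x, y, steer_rad, wheelbase)
    if along < 0 or lateral > half_width + margin:
        return "low"  # behind the vehicle or outside the swept corridor
    # Time to reach the object; a (nearly) stationary vehicle falls back to distance alone.
    time_to_reach = along / speed_mps if speed_mps > 0.1 else math.inf
    if along < 0.5 or time_to_reach < 1.5:
        return "high"
    if along < 1.5 or time_to_reach < 3.0:
        return "medium"
    return "low"


def blend(pixel: RGB, overlay: RGB, alpha: float = 0.4) -> RGB:
    """Transparent superimposition: out = alpha * overlay + (1 - alpha) * pixel, per channel."""
    r, g, b = (round(alpha * o + (1 - alpha) * p) for o, p in zip(overlay, pixel))
    return (r, g, b)


if __name__ == "__main__":
    camera_pixel = (90, 90, 90)  # gray road surface under the object
    for obj in [(2.0, 0.0), (2.5, 0.5), (2.0, 3.0)]:
        level = risk_level(*obj, speed_mps=1.5, steer_rad=0.1)
        print(obj, level, blend(camera_pixel, RISK_COLORS[level]))
```

Keeping the risk classification separate from the blending step means the color map can be tuned without touching the rendering code.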

Figure 5: Transparent color overlay indicates degree of collision risk

3. Onboard software technology

The 3D image synthesis technology was developed as software that runs on an in-vehicle embedded platform with a graphics processing unit (GPU) supporting OpenGL ES(2), the standard graphics API for embedded systems.

Results

Because it displays the vehicle's surroundings without distortion, this technology makes it easier for drivers to grasp their surroundings intuitively, including the distance to pedestrians, other vehicles, and other objects. This will help in a number of driving situations, including parking and passing through narrow roads (Figure 6). In addition, superimposed color-coded layers indicating proximity to other objects, day or night, will heighten awareness of collision risks and make it easier for drivers to understand the situation instantly when an alarm is issued (Figure 7).

Figure 6: Easier to interpret

Figure 7: Highlighted collision risk

Future Plans

Fujitsu Laboratories is conducting tests to verify how well the 3D virtual projection and point-of-view conversion technology improves the driver's field of view in a variety of driving situations, and aims to commercialize driver-assistance products based on it. It is also working to reduce the system's processing load for embedded vehicle platforms, and plans to advance technologies that recognize the surrounding environment using cameras and laser radars, with a view to applying them in more convenient driver awareness-support and self-driving systems.

E-mail: omni3d@ml.labs.fujitsu.com